| How to set compile options |
|---|
| Solution Explorer -> right-click the project name (KinectV2) -> Properties -> Configuration Properties -> C/C++ -> Command Line -> Additional Options -> /Zc:strictStrings- |

Prerequisites

The following settings are required for speech recognition with Kinect V2. In particular, the program often fails to work correctly when a different "sapi.h" elsewhere on the system is included instead of the intended one, so pay close attention to the Visual Studio project settings.

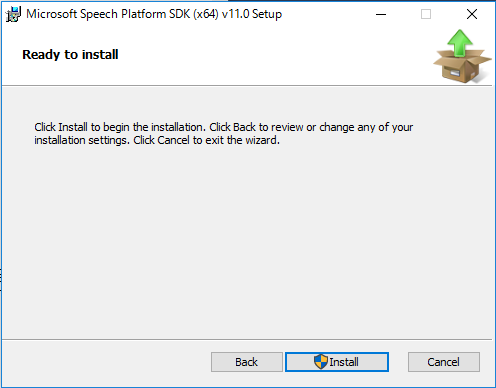

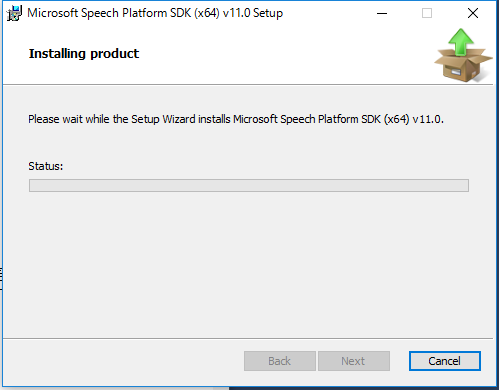

- Install Kinect V2's Speech Recognition SDK "Speech Platform SDK v11" http://www.microsoft.com/en-us/download/details.aspx?id=27226

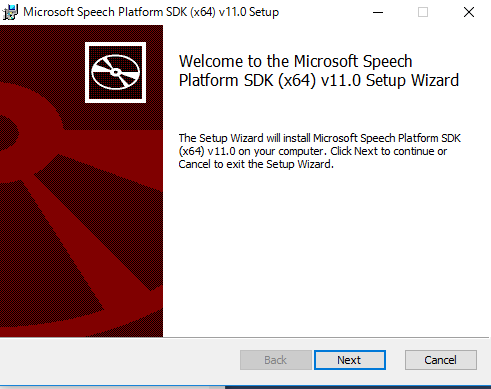

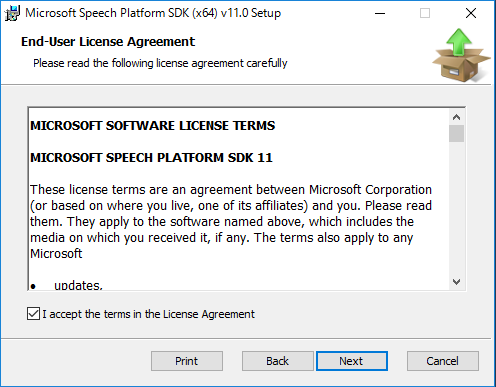

64bit version x64_MicrosoftSpeechPlatformSDK\MicrosoftSpeechPlatformSDK.msi

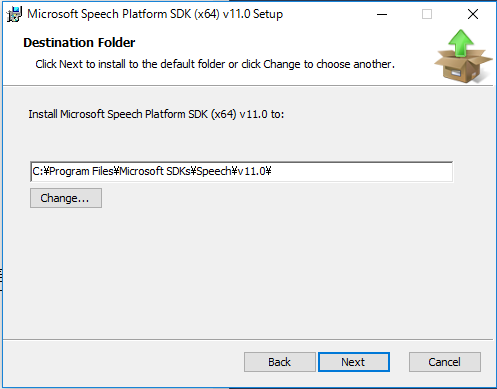

Run the downloaded msi file to install the Speech SDK at "C:\Program Files\Microsoft SDKs\Speech\v11.0".

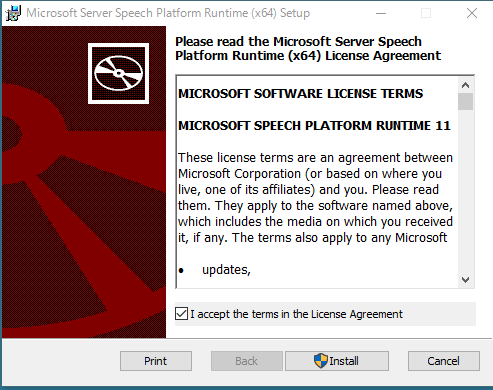

From the folder where the Speech SDK was installed, run the following msi file.

C:\Program Files\Microsoft SDKs\Speech\v11.0\Redist\SpeechPlatformRuntime.msi

- MSKinectLangPack_enUS.msi: English (US)
- MSKinectLangPack_jaJP.msi: Japanese (Japan)

Select and download these msi files, then run them to install both models.

Note that other folders containing a "sapi.h" file may take priority in the include search order.

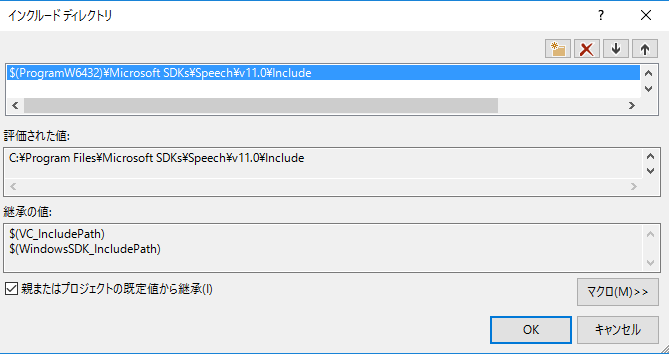

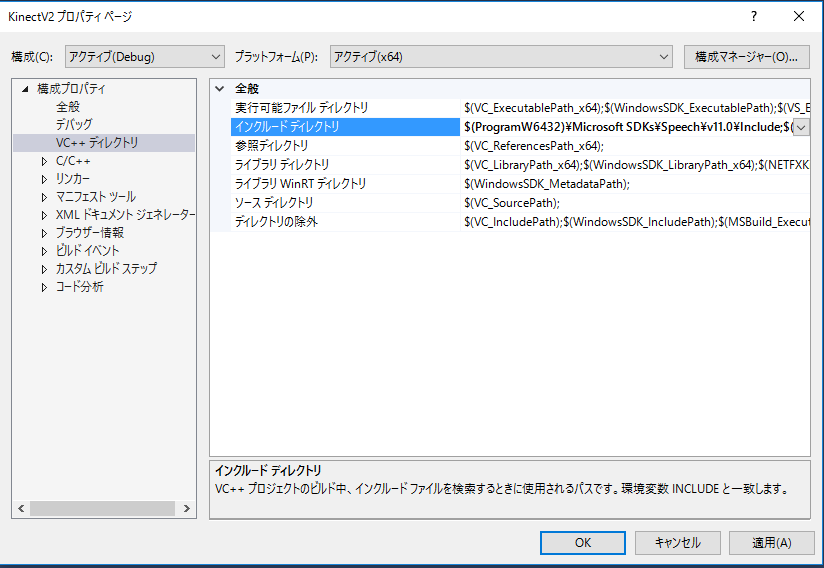

Add the Speech SDK include path

$(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Include;

to the front of the project's include directories.

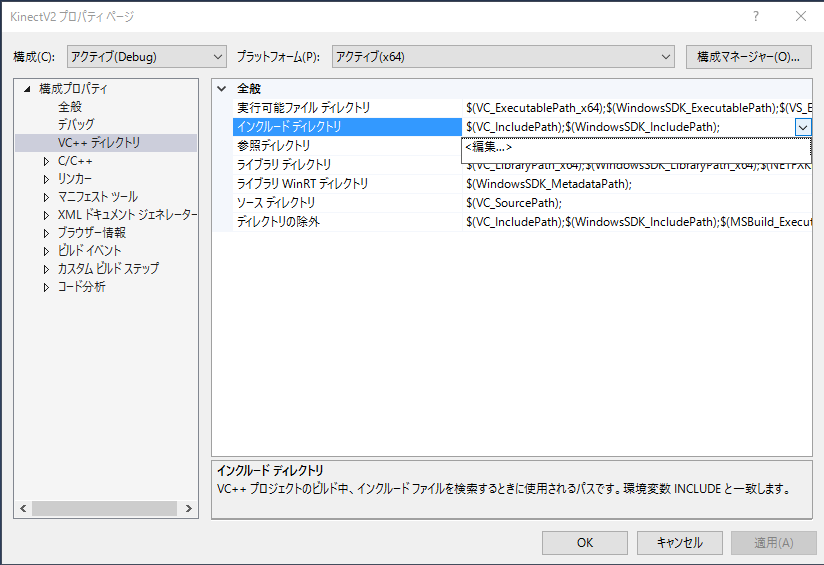

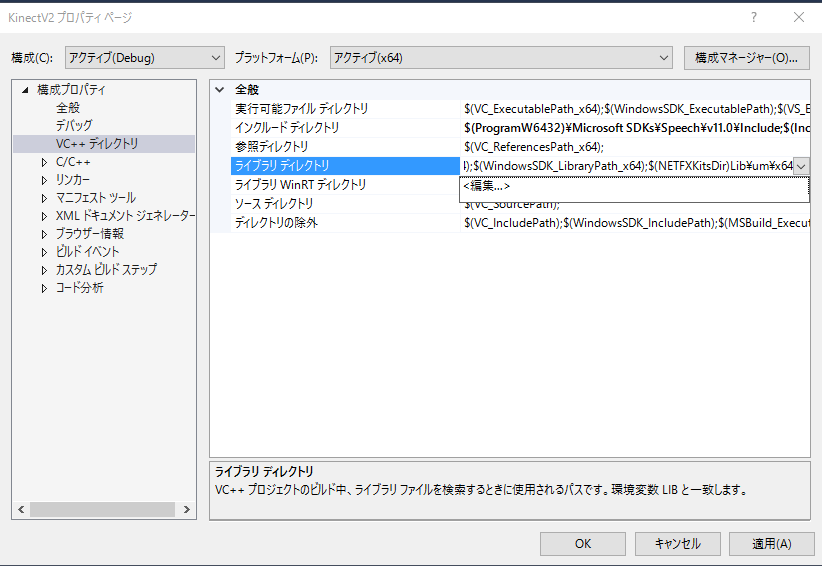

"Properies" -> "Configuration Properties" -> "VC++ directories" ->

"Include directory" -> Add $(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Include; to the beginning of characters.

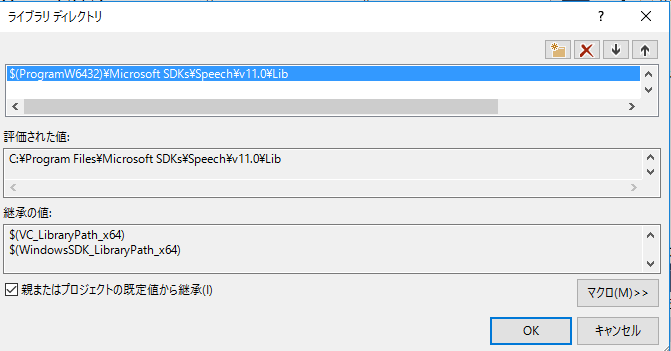

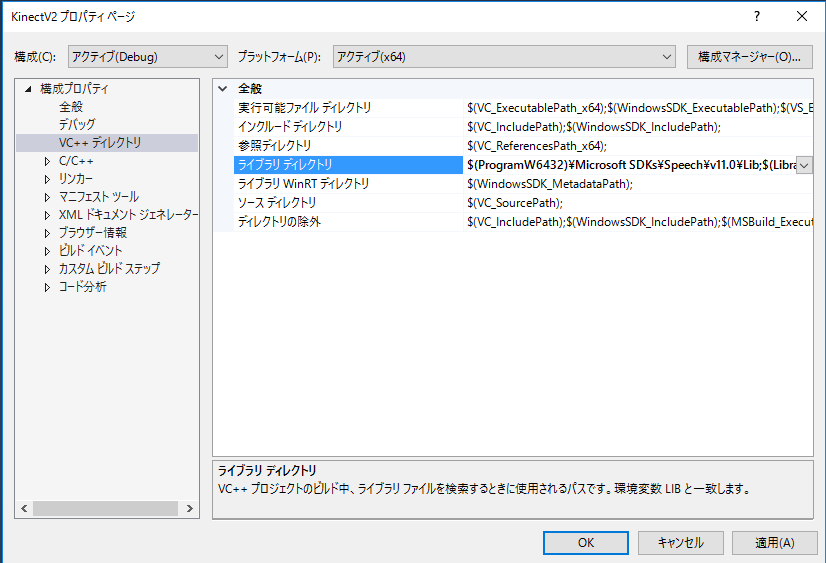

Add the Speech SDK library path

$(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Lib;

to the front of the project's library directories.

"Properties" -> "Configuration Properties" -> "VC++ Directories" -> "Library Directories" -> Add $(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Lib; at the beginning of the value.

If the correct sapi.h is not included or the correct sapi.lib is not linked, the program stops with one of the following error messages.

This sample was compiled against an incompatible version of sapi.h. Please ensure that Microsoft Speech SDK and other sample requirements are installed and then rebuild application.

failed SpFindBestToken (... abbreviated ...)

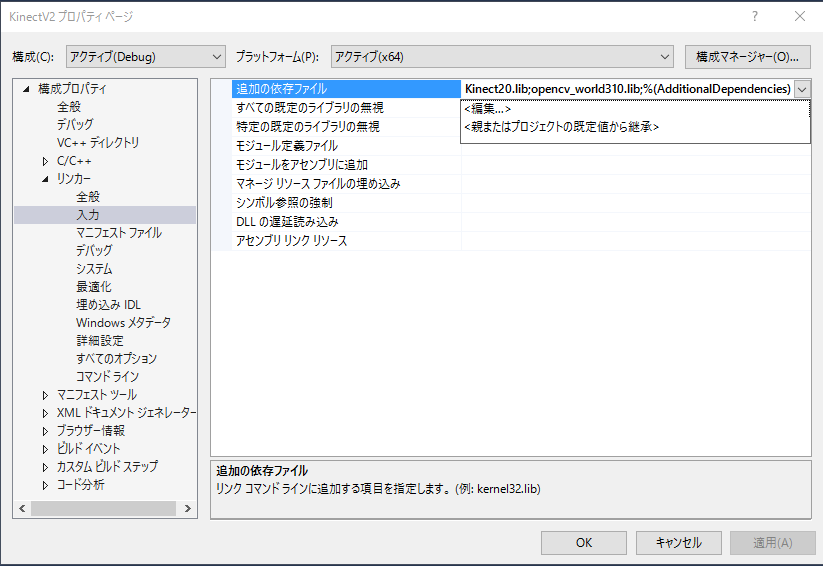

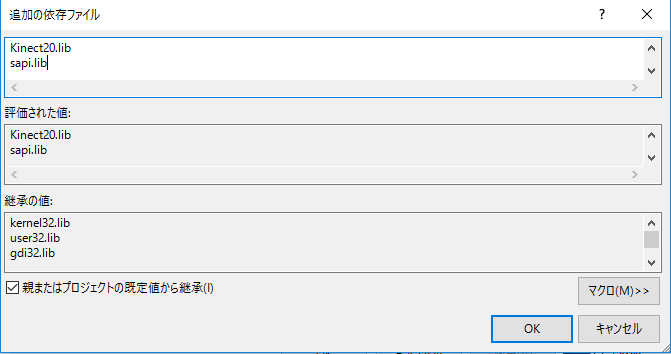

"Configuration properties" -> "Linker" -> "Input" -> "Additional dependency files" -> Add sapi.lib

Recognizing Speech

If you define the USE_SPEECH constant before including NtKinect.h, NtKinect's functions and variables for speech recognition become available.

    #define USE_SPEECH
    #include "NtKinect.h"
    ...

NtKinect's functions for Speech Recognition

| Return type | Function name | Description |
|---|---|---|
| void | setSpeechLang(string& lang, wstring& grxml) | Specify the language lang and the word (grammar) file grxml to recognize. If this function is not called, the defaults are "ja-JP" and L"Grammar_jaJP.grxml", respectively. |
| void | startSpeech() | Start speech recognition. |
| void | stopSpeech() | Stop speech recognition. |
| bool | setSpeech() | Call after startSpeech(). This function attempts speech recognition on the most recent audio data and sets the values of the member variables listed below. |

NtKinect's member variables for Speech Recognition

| Type | Variable name | Description |
|---|---|---|
| bool | recognizedSpeech | Flag indicating whether speech recognition succeeded. It is true if speechConfidence is greater than confidenceThreshold. |
| wstring | speechTag | When speech is recognized, the "tag" of the word is set. This "tag" is the value specified by the tag element in the grxml file. |
| wstring | speechItem | When speech is recognized, the "item" of the word is set. This "item" is the value specified by the item element in the grxml file. |
| float | speechConfidence | Confidence of the recognition result. When this value is greater than confidenceThreshold, the speech is judged to be recognized. |
| float | confidenceThreshold | Threshold for accepting recognized speech. You can set it by assigning directly to the variable. The default value is 0.3. |
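The rule that ties speechConfidence, confidenceThreshold, and recognizedSpeech together can be tried without a Kinect attached. The following is a minimal sketch under stated assumptions: `SpeechState` and `applyThreshold` are hypothetical stand-ins and not part of NtKinect, the confidence is assumed to lie between 0 and 1, and leaving the tag unchanged on a rejected result is an assumption about behavior, not something the library documents.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for the NtKinect members listed above (not the real class).
struct SpeechState {
    bool recognizedSpeech = false;
    std::wstring speechTag;
    std::wstring speechItem;
    float speechConfidence = 0.0f;
    float confidenceThreshold = 0.3f;  // default value stated in the table
};

// Store one candidate result and apply the threshold rule from the table.
// Returns the new value of recognizedSpeech.
bool applyThreshold(SpeechState& s, const std::wstring& tag,
                    const std::wstring& item, float confidence) {
    assert(confidence >= 0.0f && confidence <= 1.0f);  // assumed range of a confidence score
    s.speechConfidence = confidence;
    s.recognizedSpeech = confidence > s.confidenceThreshold;
    if (s.recognizedSpeech) {
        // Assumption: tag and item are only updated for accepted results.
        s.speechTag = tag;
        s.speechItem = item;
    }
    return s.recognizedSpeech;
}
```

With the default threshold of 0.3, a candidate with confidence 0.2 is rejected. After assigning 0.1 to confidenceThreshold, the same candidate is accepted, which is why a lower threshold tends to let more misrecognitions through.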

How to write the program

- Install KinectV2's speech recognition SDK "Speech Platform SDK v11". http://www.microsoft.com/en-us/download/details.aspx?id=27226

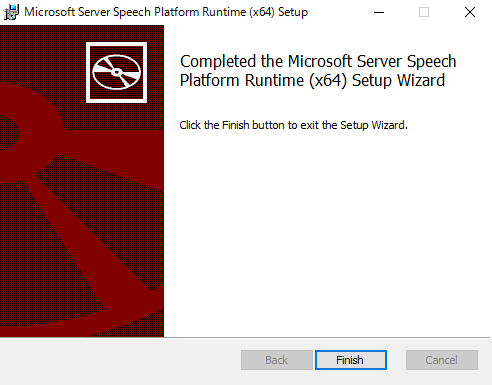

- In addition, install the Runtime of Speech SDK.

- Download and install the acoustic model prepared for each language. http://www.microsoft.com/en-us/download/details.aspx?id=43662

- Restart Windows after installing "Speech SDK" itself, "Speech SDK Runtime", and two acoustic models of KinectLangPack, "enUS" and "jaJP".

- Start from the Visual Studio project KinectV2_audio.zip from "NtKinect: How to get and record audio with Kinect V2".

- Prepare a file describing the words you want to recognize for each language.

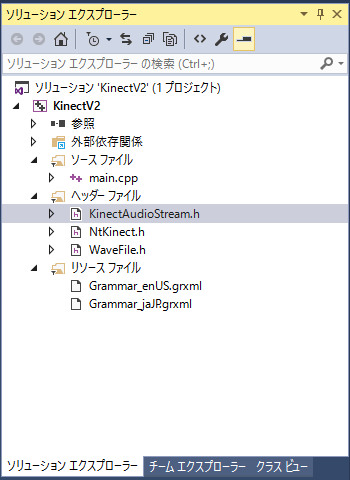

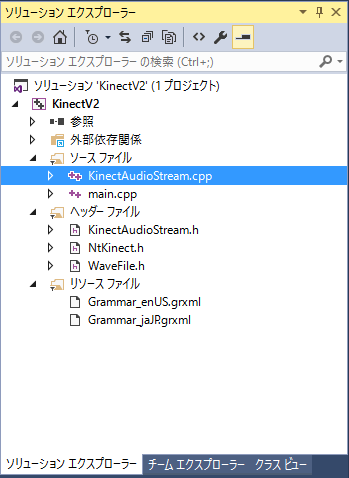

- Add KinectAudioStream.h and KinectAudioStream.cpp to the project.

- Change the project settings.

- Change the contents of main.cpp as follows.

- When you run the program, RGB images are displayed. Exit with 'q' key.

- The sample project is available here: KinectV2_speech.zip.

- Let's try it.

- Let's use Kinect V2 speech recognition with Unity. Please see here for how to do it.

64bit version x64_MicrosoftSpeechPlatformSDK\MicrosoftSpeechPlatformSDK.msi

Run the downloaded msi file to install it.

From the folder where the Speech SDK was installed, run the following msi file.

C:\Program Files\Microsoft SDKs\Speech\v11.0\Redist\SpeechPlatformRuntime.msi

- MSKinectLangPack_enUS.msi: English (US)
- MSKinectLangPack_jaJP.msi: Japanese (Japan)

Run the two downloaded msi files.

A restart may not be necessary, but do it just in case.

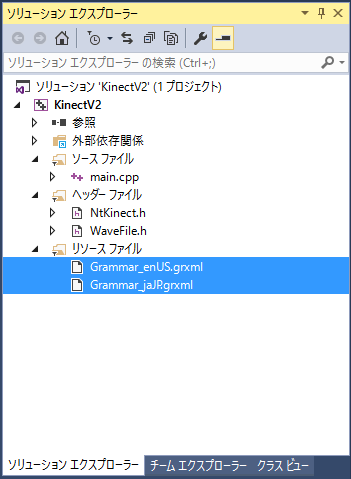

This example recognizes Japanese, but add both files to the project so that you can switch to English recognition later.

| File for enUS : | Grammar_enUS.grxml |

| File for jaJP : | Grammar_jaJP.grxml |

First, place the grxml files in the project's source folder, in this case "KinectV2_speech/KinectV2/". Then, right-click "Resource Files" in "Solution Explorer" and select "Add" -> "Existing Item".

When editing these files, save them in UTF-8 format. For the grammar specification, refer to https://www.w3.org/TR/speech-grammar/ .
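When the word list changes often, the grammar text can also be generated instead of edited by hand. The following is a minimal sketch, not part of NtKinect: `TagRule`, `escapeXml`, and `buildGrammar` are hypothetical names, the output copies the layout of the Grammar_*.grxml files in this article, and all input strings are assumed to be UTF-8 already so the result can be written to disk unchanged.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// One grammar entry: a tag and the phrases that should map to it (hypothetical type).
using TagRule = std::pair<std::string, std::vector<std::string>>;

// Escape the characters that are special in XML text content.
std::string escapeXml(const std::string& text) {
    std::string out;
    for (char c : text) {
        switch (c) {
            case '&': out += "&amp;"; break;
            case '<': out += "&lt;";  break;
            case '>': out += "&gt;";  break;
            default:  out += c;
        }
    }
    return out;
}

// Build grammar text with one tagged <item> per rule.
// lang is assumed to be a plain language code such as "en-US" or "ja-JP".
std::string buildGrammar(const std::string& lang, const std::vector<TagRule>& rules) {
    assert(!lang.empty());
    std::string xml = "<?xml version=\"1.0\" encoding=\"utf-8\" ?>\n";
    xml += "<grammar version=\"1.0\" xml:lang=\"" + lang + "\" root=\"rootRule\" "
           "tag-format=\"semantics/1.0-literals\" "
           "xmlns=\"http://www.w3.org/2001/06/grammar\">\n";
    xml += "  <rule id=\"rootRule\">\n    <one-of>\n";
    for (const TagRule& rule : rules) {
        xml += "      <item>\n";
        xml += "        <tag>" + escapeXml(rule.first) + "</tag>\n";
        xml += "        <one-of>\n";
        for (const std::string& phrase : rule.second) {
            xml += "          <item> " + escapeXml(phrase) + " </item>\n";
        }
        xml += "        </one-of>\n      </item>\n";
    }
    xml += "    </one-of>\n  </rule>\n</grammar>\n";
    return xml;
}
```

Writing the returned string to a file opened in binary mode keeps the UTF-8 bytes intact.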

First, copy KinectAudioStream.h and KinectAudioStream.cpp to the project's source folder, in this case "KinectV2_speech/KinectV2/". Then, right-click "Resource Files" in "Solution Explorer" and select "Add" -> "Existing Item".

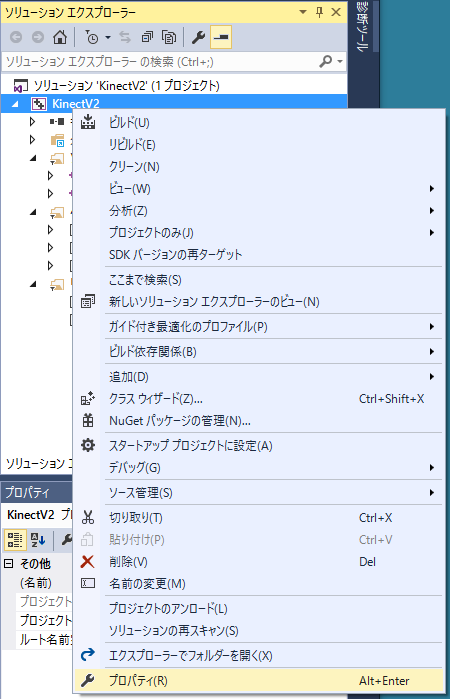

Right-click the project name (here KinectV2) in "Solution Explorer" and select "Properties" from the menu.

Add the Speech SDK include path

$(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Include;

to the front of the project's include directories.

"Configuration Properties" -> "VC++ Directories" -> "Include Directories" -> Add $(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Include; at the beginning of the value.

Add the Speech SDK library path

$(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Lib;

to the front of the project's library directories.

"Configuration Properties" -> "VC++ Directories" -> "Library Directories" -> Add $(ProgramW6432)\Microsoft SDKs\Speech\v11.0\Lib; at the beginning of the value.

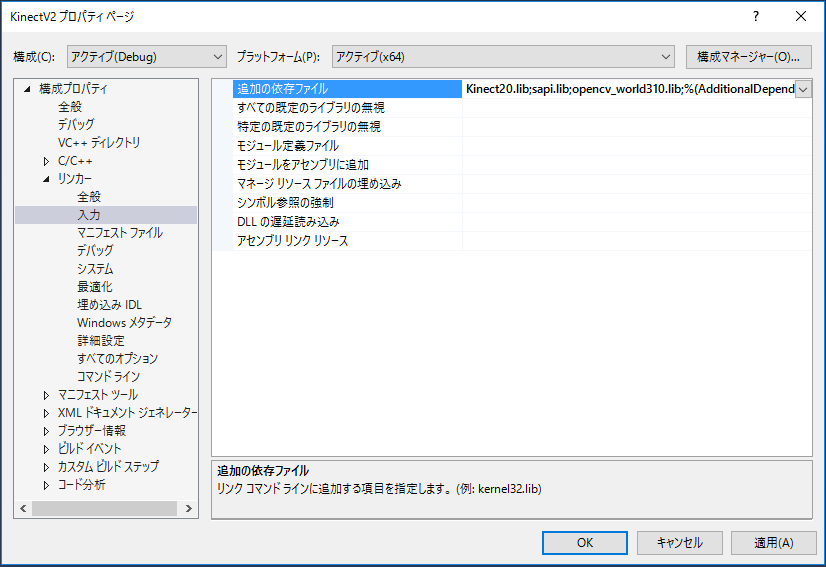

In the project properties, add the sapi.lib library.

"Configuration Properties" -> "Linker" -> "Input" -> "Additional Dependencies" -> Add sapi.lib

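Define the USE_SPEECH constant before including NtKinect.h.

Since the speech recognition program operates in multi-threading, main() calls CoInitializeEx(NULL, COINIT_MULTITHREADED) before using Kinect and CoUninitialize() at the end.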

Because Microsoft's Speech SDK returns recognition results as wchar_t strings, NtKinect holds the result as the wstring data type. A wstring cannot be written to cout, so this example outputs it to wcout. Be careful: wcout will not work unless you set the locale.

std::wcout.imbue(std::locale("")); // set locale

Note that a wstring literal is prefixed with 'L', as in L"EXIT". Also, avoid using cout and wcout at the same time.
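The locale setup and the wide-string output can be kept in two small helpers. The following is a minimal sketch, not part of NtKinect: `useUserLocale` and `printResult` are hypothetical names, and falling back to the classic "C" locale when the environment's locale is unavailable is an assumption about what is acceptable for your program.

```cpp
#include <cassert>
#include <iostream>
#include <locale>
#include <sstream>
#include <stdexcept>
#include <string>

// Apply the user's default locale to a wide stream. If the environment's
// locale name is not available, fall back to the classic "C" locale.
void useUserLocale(std::wostream& out) {
    try {
        out.imbue(std::locale(""));
    } catch (const std::runtime_error&) {
        out.imbue(std::locale::classic());
    }
}

// Write "tag item" and a newline to any wide stream, for example std::wcout
// or a std::wostringstream used as a test buffer.
void printResult(std::wostream& out, const std::wstring& tag, const std::wstring& item) {
    assert(!tag.empty());  // a recognized result is expected to carry a tag
    out << tag << L" " << item << L'\n';
}
```

In a console program this would be used as `useUserLocale(std::wcout);` once at startup, followed by `printResult(std::wcout, L"EXIT", L"終了");` for each result. Taking the stream as a parameter keeps all output on the wide stream and makes it easy to avoid mixing cout and wcout.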

If you do heavy processing with Kinect, audio capture becomes intermittent and speech is not recognized well. Therefore, this example omits the processing of Kinect's other sensors and the OpenCV window display.

To read keyboard input on the console in real time, we use the _kbhit() and _getch() functions from conio.h.

| main.cpp |

    #include <iostream>
    #include <locale>
    #include <sstream>
    #include <string>
    #include <conio.h>

    #define USE_SPEECH
    #include "NtKinect.h"

    using namespace std;

    void doJob() {
      NtKinect kinect;
      kinect.startSpeech();
      std::wcout.imbue(std::locale(""));  // set locale so that wcout can print wide strings
      while (1) {
        kinect.setSpeech();  // try recognition on the most recent audio data
        if (kinect.recognizedSpeech) {
          wcout << kinect.speechTag << L" " << kinect.speechItem << endl;
        }
        if (kinect.speechTag == L"EXIT") break;  // a word tagged EXIT was spoken
        if (_kbhit() && _getch() == 'q') break;  // 'q' key pressed on the console
      }
      kinect.stopSpeech();
    }

    int main(int argc, char** argv) {
      try {
        // Speech recognition operates in multi-threading.
        ERROR_CHECK(CoInitializeEx(NULL, COINIT_MULTITHREADED));
        doJob();
        CoUninitialize();
      } catch (exception &ex) {
        cout << ex.what() << endl;
        string s;
        cin >> s;  // wait for input so the message stays visible
      }
      return 0;
    }

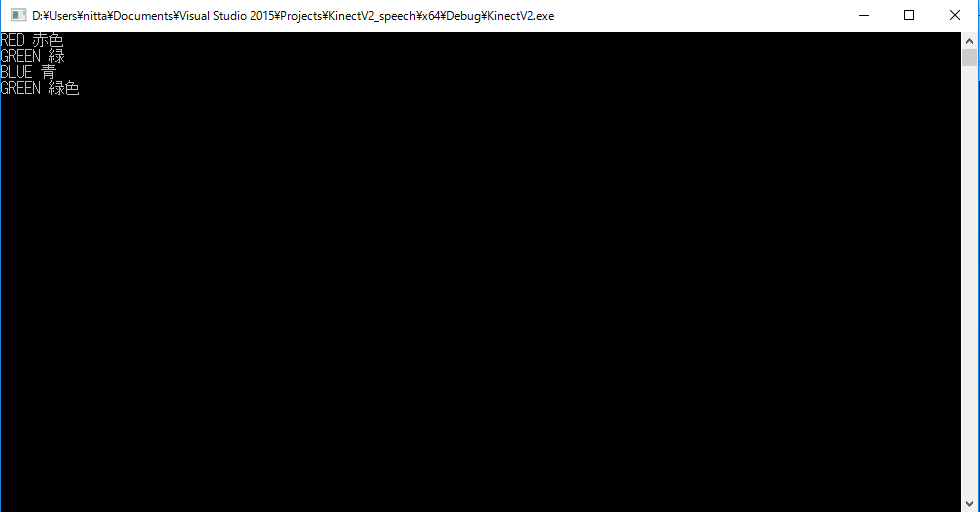

The result of speech recognition is output to the console. The current grxml registers 8 Japanese words: "AKA", "AKAIRO", "AO", "AOIRO", "MIDORI", "MIDORIIRO", "SHUURYOU", and "OWARI". They mean "red", "red color", "blue", "blue color", "green", "green color", "end", and "end", respectively. When the word "SHUURYOU" or "OWARI" is recognized, the program ends.

| Grammar_jaJP.grxml |

<?xml version="1.0" encoding="utf-8" ?>

<grammar version="1.0" xml:lang="ja-JP" root="rootRule" tag-format="semantics/1.0-literals" xmlns="http://www.w3.org/2001/06/grammar">

<rule id="rootRule">

<one-of>

<item>

<tag>RED</tag>

<one-of>

<item> 赤 </item>

<item> 赤色 </item>

</one-of>

</item>

<item>

<tag>GREEN</tag>

<one-of>

<item> 緑 </item>

<item> 緑色 </item>

</one-of>

</item>

<item>

<tag>BLUE</tag>

<one-of>

<item> 青 </item>

<item> 青色 </item>

</one-of>

</item>

<item>

<tag>EXIT</tag>

<one-of>

<item> 終わり </item>

<item> 終了 </item>

</one-of>

</item>

</one-of>

</rule>

</grammar>

|
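Because every phrase in the grammar maps to one of four tags, a program only needs to branch on speechTag, not on the spoken word. The following is a minimal sketch, not part of NtKinect: the `Action` enum, `actionForTag`, `Bgr`, and `colorFor` are hypothetical names chosen for illustration, and the blue-green-red channel order is an assumption made so the colors could be passed to OpenCV drawing calls.

```cpp
#include <cassert>
#include <string>

// Possible reactions to a recognized tag (names are illustrative).
enum class Action { None, ShowRed, ShowGreen, ShowBlue, Exit };

// Map the tag values used in the grammar file to an action.
Action actionForTag(const std::wstring& tag) {
    if (tag == L"RED")   return Action::ShowRed;
    if (tag == L"GREEN") return Action::ShowGreen;
    if (tag == L"BLUE")  return Action::ShowBlue;
    if (tag == L"EXIT")  return Action::Exit;
    return Action::None;  // unknown or empty tag
}

// Color in blue-green-red order.
struct Bgr { int b, g, r; };

// Color to draw for one of the three color actions.
Bgr colorFor(Action a) {
    assert(a == Action::ShowRed || a == Action::ShowGreen || a == Action::ShowBlue);
    switch (a) {
        case Action::ShowRed:   return {0, 0, 255};
        case Action::ShowGreen: return {0, 255, 0};
        default:                return {255, 0, 0};  // Action::ShowBlue
    }
}
```

Since "赤" and "赤色" share the tag RED, both lead to the same action. Adding another spoken variant only requires editing the grxml file.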

Since the above zip file may not include the latest "NtKinect.h", download the latest version from here and replace the old one with it.

The threshold is currently 0.3. Try lowering it to 0.1 to see how misrecognition increases.

Let's change the recognition language to English.

NtKinect kinect;

kinect.setSpeechLang("en-US", L"Grammar_enUS.grxml"); // <-- Add this line to recognize English.

kinect.startSpeech();

| Grammar_enUS.grxml |

<?xml version="1.0" encoding="utf-8" ?>

<grammar version="1.0" xml:lang="en-US" root="rootRule" tag-format="semantics/1.0-literals" xmlns="http://www.w3.org/2001/06/grammar">

<rule id="rootRule">

<one-of>

<item>

<tag>RED</tag>

<one-of>

<item> Red </item>

</one-of>

</item>

<item>

<tag>GREEN</tag>

<one-of>

<item> Green </item>

</one-of>

</item>

<item>

<tag>BLUE</tag>

<one-of>

<item> Blue </item>

</one-of>

</item>

<item>

<tag>EXIT</tag>

<one-of>

<item> Exit </item>

<item> Quit </item>

<item> Stop </item>

</one-of>

</item>

</one-of>

</rule>

</grammar>

|
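If the language is chosen at run time, the grammar file name can be derived from the language code. The following is a minimal sketch, not part of NtKinect: `grammarFileFor` is a hypothetical helper, and the naming rule is only inferred from the two file names used in this article.

```cpp
#include <cassert>
#include <string>

// Derive the grammar file name from a language code, following the naming of
// the two files in this article ("en-US" -> L"Grammar_enUS.grxml").
std::wstring grammarFileFor(const std::string& lang) {
    assert(!lang.empty());
    std::wstring name = L"Grammar_";
    for (char c : lang) {
        // Language codes are plain ASCII, so widening each char is safe here.
        if (c != '-') name += static_cast<wchar_t>(c);
    }
    return name + L".grxml";
}
```

The function table lists the parameters of setSpeechLang as non-const references, so it may be safer to store the language code and the file name in named string and wstring variables and pass those variables to the call.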

http://nw.tsuda.ac.jp/