Call the kinect.setDepth() function to store depth (distance) data in kinect.depthImage. Since no argument is specified, each pixel value is raw, that is, the distance to the object in millimeters.

| main.cpp |

/*

* Copyright (c) 2017 Yoshihisa Nitta

* Released under the MIT license

* http://opensource.org/licenses/mit-license.php

*/

#include <iostream>

#include <sstream>

#define _USE_MATH_DEFINES

#include <cmath>

#include "NtKinect.h"

using namespace std;

void draw(cv::Mat& img, const vector<double>& v, int start = 0, int n = 1024) {

stringstream ss;

if (start < 0) start = v.size() + start;

if (start < 0) start = 0;

if (start >= v.size()) return;

int end = start + n;

if (end > v.size()) end = v.size();

int m = end - start; // real data number

if (m <= 0) return;

int padding = 30;

double wstep = ((double) img.cols - 2 * padding) / n;

auto Dmin = *min_element(v.begin()+start,v.begin()+end);

auto Dmax = *max_element(v.begin()+start,v.begin()+end);

if (Dmin == Dmax) Dmax = Dmin + 1;

cv::rectangle(img,cv::Rect(0,0,img.cols,img.rows),cv::Scalar(255,255,255),-1);

for (int i=0; i<m; i++) {

int x = (int) (padding + i * wstep);

int y = (int) (padding + (img.rows - 2 * padding) * (v[start+i] - Dmin) / (Dmax-Dmin));

y = img.rows - 1 - y;

cv::rectangle(img,cv::Rect(x-2,y-2,4,4),cv::Scalar(255,0,0),-1);

}

ss.str(""); ss << (int)Dmin;

cv::putText(img,ss.str(),cv::Point(0,img.rows-padding),cv::FONT_HERSHEY_SIMPLEX,0.4,cv::Scalar(0,0,0),1,CV_AA);

ss.str(""); ss << (int)Dmax;

cv::putText(img,ss.str(),cv::Point(0,padding),cv::FONT_HERSHEY_SIMPLEX,0.4,cv::Scalar(0,0,0),1,CV_AA);

}

void DFT(vector<double>& data,vector<double>&ret,int start, int n) {

if (start < 0) start = data.size() + start;

if (start < 0) start = 0;

if (start >= data.size()) start = data.size();

if (start + n > data.size()) n = data.size() - start;

ret.resize(n);

if (n <= 0) return;

vector<double> re(n), im(n);

for (int i=0; i<n; i++) {

re[i] = 0.0;

im[i] = 0.0;

double d = 2 * M_PI * i / n;

for (int j=0; j<n; j++) {

re[i] += data[start+j] * cos(d * j);

im[i] -= data[start+j] * sin(d * j);

}

}

for (int i=0; i<n; i++) ret[i] = sqrt(re[i]*re[i] + im[i]*im[i]);

}

void drawTarget(NtKinect& kinect,cv::Mat& img,int dx,int dy,UINT16 depth) {

int scale = 4;

kinect.rgbImage.copyTo(img);

cv::resize(img,img,cv::Size(img.cols/scale,img.rows/scale),0,0);

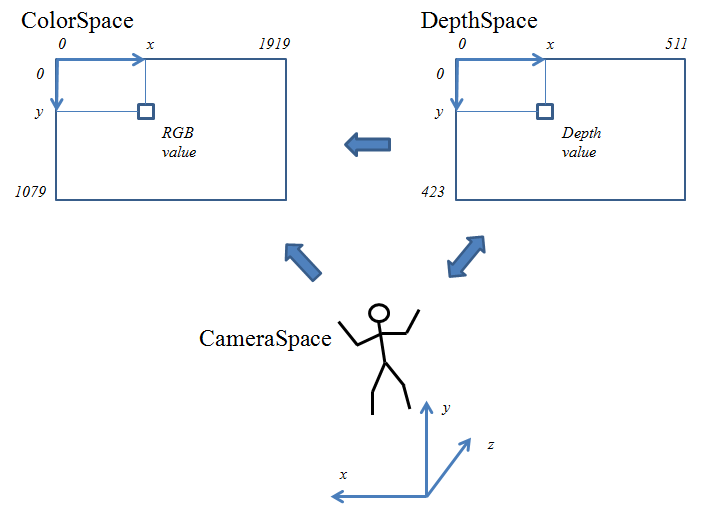

DepthSpacePoint dp; dp.X = (float)dx; dp.Y = (float)dy;

ColorSpacePoint cp;

kinect.coordinateMapper->MapDepthPointToColorSpace(dp,depth,&cp);

cv::rectangle(img,cv::Rect((int)cp.X/scale-10,(int)cp.Y/scale-10,20,20),cv::Scalar(0,0,255),2);

stringstream ss;

ss << dx << " " << dy << " " << depth << " " << (int)cp.X << " " << (int)cp.Y;

cv::putText(img,ss.str(),cv::Point(10,50),cv::FONT_HERSHEY_SIMPLEX,0.7,cv::Scalar(0,0,255),2,CV_AA);

}

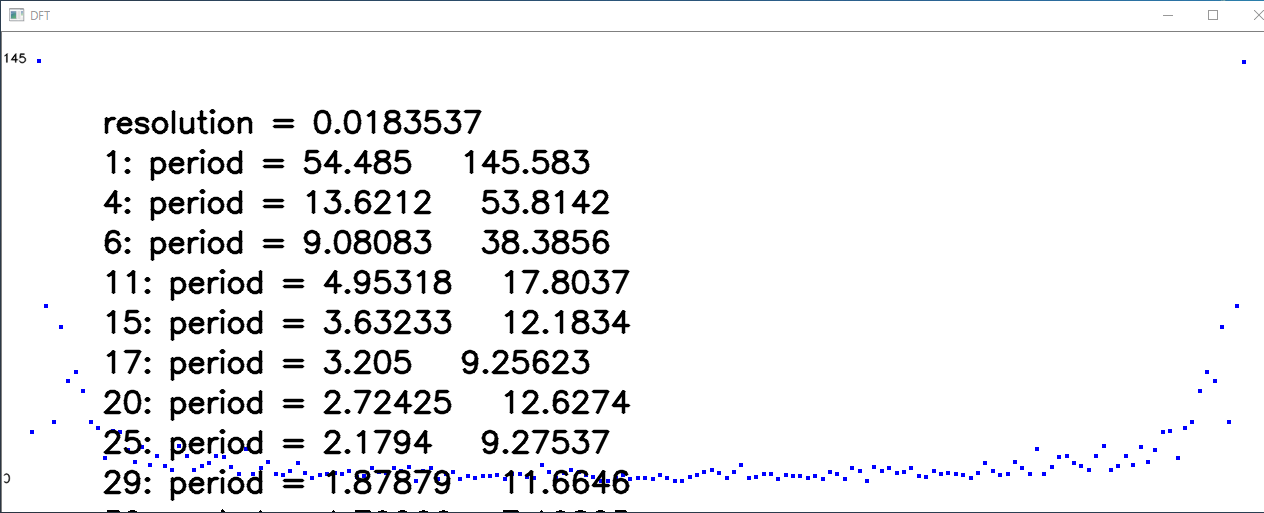

void drawMsg(cv::Mat& img, vector<double>& ret,long dt) {

stringstream ss;

double df = 1000.0 / dt; // frequency resolution (sec)

int y = 100;

ss.str("");

ss << "resolution = " << df ;

cv::putText(img,ss.str(),cv::Point(100,y),cv::FONT_HERSHEY_SIMPLEX,1.0,cv::Scalar(0,0,0),2,CV_AA);

y += 40;

for (int i=1; i<ret.size()/2 -1; i++) {

if (ret[i] > ret[i-1] && ret[i] > ret[i+1]) {

double freq = i * df;

if (freq > 1) continue;

ss.str("");

ss << i << ": period = " << (1.0/freq) << " " << ret[i];

cv::putText(img,ss.str(),cv::Point(100,y),cv::FONT_HERSHEY_SIMPLEX,1.0,cv::Scalar(0,0,0),2,CV_AA);

y += 40;

}

}

}

void doJob() {

const int n_ave = 8; // running average

const long min_period = 32 * 1000; // milliseconds

int n_dft = 512; // sampling number (changed)

NtKinect kinect;

cv::Mat rgb, dImg(480,1280,CV_8UC3);;

vector<double> depth, depth_ave;

double sum = 0;

vector<long> vtime;

vector<double> result(n_dft);

long t0 = GetTickCount();

bool init_flag = false;

for (int count=0; ; count++) {

kinect.setDepth();

int dx = kinect.depthImage.cols / 2;

int dy = kinect.depthImage.rows * 2 / 3;

UINT16 dz = kinect.depthImage.at<UINT16>(dy,dx);

depth.push_back((double)dz);

long t = GetTickCount();

vtime.push_back(t);

sum += (double)dz;

if (depth.size() > n_ave) sum -= depth[depth.size()-1-n_ave];

if (depth.size() >= n_ave) {

depth_ave.push_back(sum/n_ave);

}

kinect.setRGB();

drawTarget(kinect,rgb,dx,dy,dz);

cv::imshow("rgb", rgb);

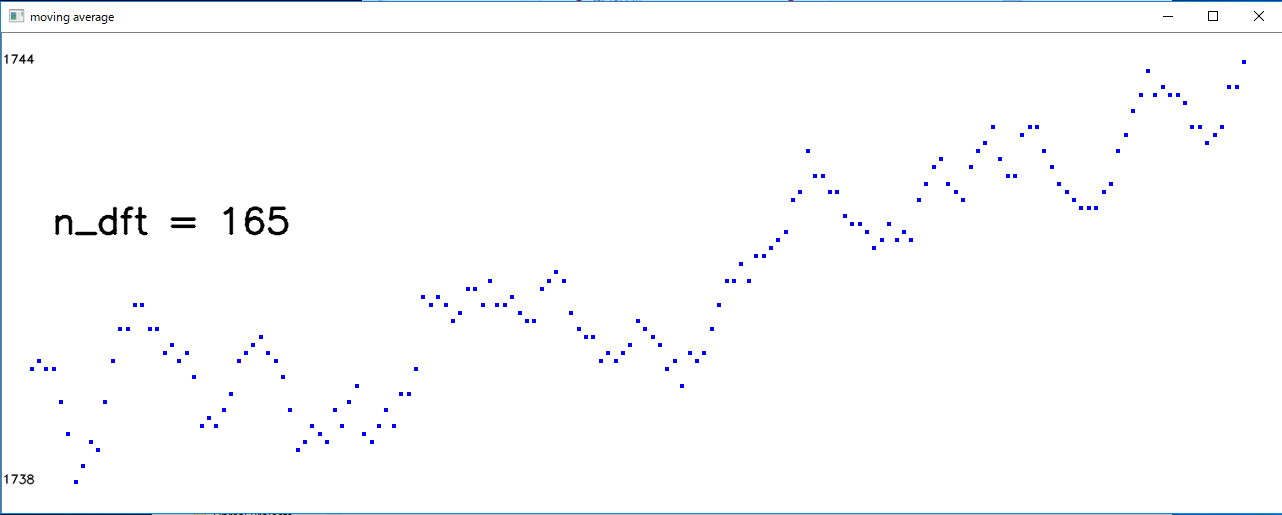

draw(dImg, depth_ave, -n_dft, n_dft);

if (init_flag) {

stringstream ss;

ss << "n_dft = " << n_dft;

cv::putText(dImg,ss.str(),cv::Point(50,200),cv::FONT_HERSHEY_SIMPLEX,1.2,cv::Scalar(0,0,0),2,CV_AA);

}

cv::imshow("moving average",dImg);

if (t - t0 >= min_period) {

if (init_flag == false) {

//n_dft = (int) pow(2.0, (int)ceil(log2((double) depth_ave.size())));

n_dft = depth_ave.size();

init_flag = true;

}

} else {

if (n_dft < depth_ave.size()) n_dft *= 2;

}

if (init_flag) {

auto Dmin = *min_element(depth_ave.end()-n_dft,depth_ave.end());

auto Dmax = *max_element(depth_ave.end()-n_dft,depth_ave.end());

auto Dmid = (Dmin + Dmax) / 2.0;

for (int i=0; i<n_dft; i++) {

result[i] = depth_ave[depth_ave.size()-n_dft+i] - Dmid;

}

DFT(result,result,0,n_dft);

draw(dImg,result,0,result.size());

drawMsg(dImg,result,t - vtime[vtime.size()-n_dft]);

cv::imshow("DFT",dImg);

}

if (depth_ave.size() > 10 * n_dft) {

depth.erase(depth.begin(), depth.end()-n_dft*2);

depth_ave.erase(depth_ave.begin(), depth_ave.end()-n_dft*2);

vtime.erase(vtime.begin(), vtime.end()-n_dft*2);

}

auto key = cv::waitKey(1);

if (key == 'q') break;

}

cv::destroyAllWindows();

}

int main(int argc, char** argv) {

try {

doJob();

} catch (exception &ex) {

cout << ex.what() << endl;

string s;

cin >> s;

}

return 0;

}

|

[Caution] Run this program in Debug mode in Visual Studio 2017. In Release mode it may crash during execution for unknown reasons. A program built in Debug mode must link opencv_world330d.lib as its OpenCV library.

To tell the truth, the DFT is not an appropriate method for computing the breathing period. The reason is as follows.

Looking at the execution example, $N_s = 165$ measurements are obtained in $T = 30$ seconds, so the sampling frequency is $\displaystyle f_s = \frac{N_s}{T} = \frac{165}{30} = 5.5$ Hz; that is, the depth is sampled 5.5 times per second. I ran it on a MacBook Pro, and it is quite slow.

The frequency resolution is $\displaystyle \Delta f = \frac{1}{T} = \frac{1}{30} = 0.0333\cdots$ Hz. When the discrete Fourier transform is applied to this data, it is decomposed into waves with frequencies $\Delta f$, $2 \Delta f$, $\cdots$, $\displaystyle \frac{N_s}{2} \Delta f$, that is, $\displaystyle \frac{1}{30}, \frac{2}{30}, \frac{3}{30}, \frac{4}{30}, \cdots$ Hz, whose periods (the reciprocals of the frequencies) are $\displaystyle \frac{T}{k} = 30, 15, 10, 7.5, 6, \cdots$ seconds. If one breath takes about 2 seconds, the available components around that period are sparse: the nearest ones are $30/14 \approx 2.14$, $30/15 = 2$ and $30/16 = 1.875$ seconds, so the period can only be resolved in coarse steps, and sampling over a longer period is necessary to obtain meaningful values.

Therefore, a method other than the FFT is probably more appropriate for calculating the breathing period. I will not discuss here which method is best suited to that purpose.

Since the above zip file may not include the latest "NtKinect.h", download the latest version from here and replace the old one with it.