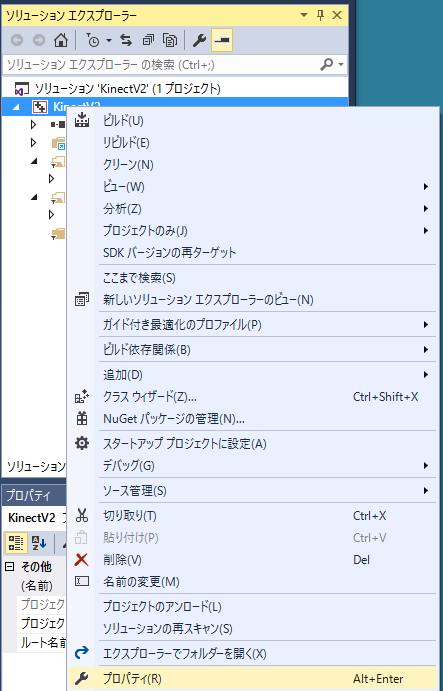

Right-click on the project name in Solution Explorer and select Properties from the menu.

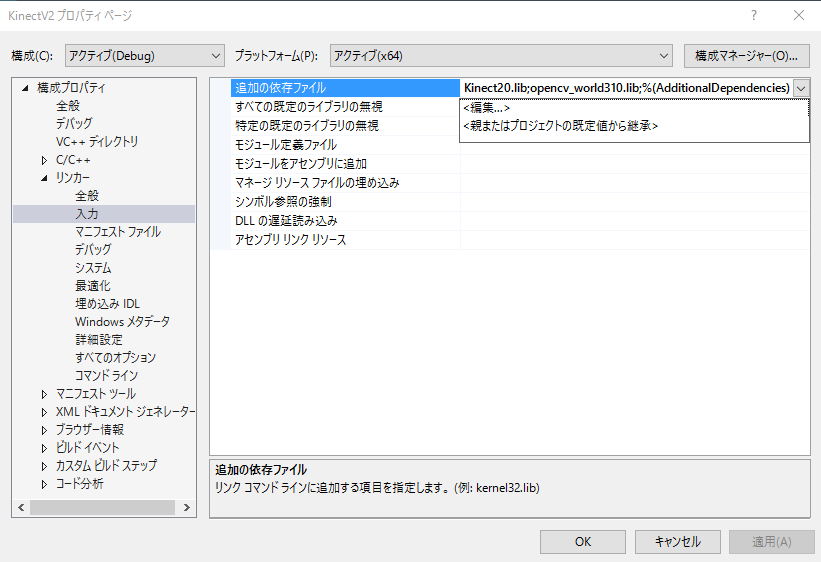

Under "Configuration Properties", open "Linker" and then "Input", select "Additional Dependencies", and choose "Edit".

Add Kinect20.Face.lib.

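In "Post-Build Event" of "Build Events" in "Configuration Properties", set "Command Line" to the following command, which copies the Face runtime files to the output directory.

    xcopy "$(KINECTSDK20_DIR)Redist\Face\x64" "$(OutDir)" /e /y /i /r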

Define the USE_FACE constant before including NtKinect.h.
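The order matters because a header only sees macros that are defined before its `#include` line. The sketch below imitates that mechanism with a stand-in feature switch. It assumes that NtKinect.h guards its face-related code with a preprocessor check on `USE_FACE`; `kFaceEnabled` and `faceSupport()` are hypothetical names used only for illustration and are not part of NtKinect.

```cpp
#include <string>

// Define the switch first, as you would before #include "NtKinect.h".
#define USE_FACE

// Stand-in for the part of a header that reacts to the switch.
// (Hypothetical: kFaceEnabled is not an NtKinect name.)
#ifdef USE_FACE
constexpr bool kFaceEnabled = true;   // face code would be compiled in
#else
constexpr bool kFaceEnabled = false;  // face code would be skipped
#endif

// Describes what the preprocessor decided.
inline std::string faceSupport() {
    return kFaceEnabled ? "face support compiled in"
                        : "face support left out";
}
```

If the `#define` were moved below the `#ifdef`, the second branch would be taken, which is why the constant has to come before the include.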

Call the kinect.setSkeleton() function. This example also draws each joint of kinect.skeleton on the RGB image, but you can omit that drawing step. What matters is that kinect.setSkeleton() is called before face recognition, so that the skeleton is recognized first.

Then call the kinect.setFace() function. It sets the values of kinect.faceRect, kinect.facePoint, kinect.faceProperty, and kinect.faceDirection, which you can then use as needed.
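One common use of a face rectangle is to crop that region out of the RGB image. As a minimal sketch, assuming a rectangle in kinect.faceRect can extend past the image border (this is an assumption, not something the tutorial states), the helper below clips a rectangle to the image first. `Rect` is a plain stand-in for `cv::Rect` so that the sketch compiles without OpenCV, and `clampToImage` is a hypothetical helper name.

```cpp
#include <algorithm>

// Plain stand-in for cv::Rect so the sketch compiles without OpenCV.
struct Rect { int x, y, width, height; };

// Clip r to an image of size imageWidth x imageHeight.
// Returns a rectangle with zero width and height if nothing overlaps.
inline Rect clampToImage(const Rect& r, int imageWidth, int imageHeight) {
    int left   = std::max(r.x, 0);
    int top    = std::max(r.y, 0);
    int right  = std::min(r.x + r.width,  imageWidth);
    int bottom = std::min(r.y + r.height, imageHeight);
    if (right <= left || bottom <= top) return Rect{0, 0, 0, 0};
    return Rect{left, top, right - left, bottom - top};
}
```

With OpenCV types the same clipping can be written as `r & cv::Rect(0, 0, image.cols, image.rows)`, where `image` is the RGB image.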

main.cpp

    #include <iostream>
    #include <sstream>

    #define USE_FACE
    #include "NtKinect.h"

    using namespace std;

    void doJob() {
      NtKinect kinect;
      while (1) {
        kinect.setRGB();
        // The skeleton must be recognized before face recognition.
        kinect.setSkeleton();
        // Optional: mark each tracked joint with a red square.
        for (auto person : kinect.skeleton) {
          for (auto joint : person) {
            if (joint.TrackingState == TrackingState_NotTracked) continue;
            ColorSpacePoint cp;
            kinect.coordinateMapper->MapCameraPointToColorSpace(joint.Position, &cp);
            cv::rectangle(kinect.rgbImage, cv::Rect((int)cp.X-5, (int)cp.Y-5, 10, 10), cv::Scalar(0,0,255), 2);
          }
        }
        kinect.setFace();
        // Face bounding rectangles (cyan in BGR order).
        for (cv::Rect r : kinect.faceRect) {
          cv::rectangle(kinect.rgbImage, r, cv::Scalar(255, 255, 0), 2);
        }
        // Face parts (yellow in BGR order).
        for (vector<PointF> vf : kinect.facePoint) {
          for (PointF p : vf) {
            cv::rectangle(kinect.rgbImage, cv::Rect((int)p.X-3, (int)p.Y-3, 6, 6), cv::Scalar(0, 255, 255), 2);
          }
        }
        cv::imshow("rgb", kinect.rgbImage);
        auto key = cv::waitKey(1);
        if (key == 'q') break;
      }
      cv::destroyAllWindows();
    }

    int main(int argc, char** argv) {
      try {
        doJob();
      } catch (exception &ex) {
        cout << ex.what() << endl;
        string s;
        cin >> s;
      }
      return 0;
    }

Recognized face information is displayed on the RGB image.

In this example, the rectangular area of each face is drawn as a cyan rectangle, and a small yellow square is drawn at the position of each face part (left eye, right eye, nose, left corner of the mouth, right corner of the mouth).
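The colors and marker sizes come directly from the arguments passed to cv::rectangle. The sketch below restates them with plain stand-in types so that it compiles without the Kinect SDK or OpenCV: `MarkerRect`, `Bgr`, `markerAround`, and the two color constants are hypothetical names, and `PointF` here is a local stand-in with the same `X` and `Y` fields. It shows that each face part is marked by a square centered on the truncated point coordinates, and that the color values are in blue, green, red order.

```cpp
// Plain stand-ins so the sketch needs no SDK or OpenCV headers.
struct PointF { float X, Y; };
struct MarkerRect { int x, y, width, height; };

// Square of side 2*half centered on p, using the same truncating
// cast as the example: (int)p.X - half, (int)p.Y - half.
inline MarkerRect markerAround(const PointF& p, int half) {
    return MarkerRect{static_cast<int>(p.X) - half,
                      static_cast<int>(p.Y) - half,
                      2 * half, 2 * half};
}

// Blue, green, red: the channel order OpenCV uses for color images.
struct Bgr { int b, g, r; };
constexpr Bgr kFaceRectColor{255, 255, 0};   // blue + green = cyan
constexpr Bgr kFacePointColor{0, 255, 255};  // green + red = yellow
```

Calling `markerAround(p, 3)` gives the same 6x6 square that the example draws for each face part.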

Since the above zip file may not include the latest "NtKinect.h", download the latest version from here and replace the old one with it.