How to build Kinect V2 gesture recognition as a DLL and use it from Unity

2016.12.13: created by

2017.10.07: revised by


Prerequisite knowledge

Creating Dynamic Link Library using Gesture Recognition with Kinect V2

In "NtKinect: How to make Kinect V2 program as DLL and using it from Unity", it is explained how to create a DLL file that uses the basic functions of OpenCV and Kinect V2 via NtKinect, and how to use it from other homebrew programs and from Unity.

However, programs that recognize faces, speech, and gestures with Kinect V2 require additional DLL files for each function at execution time. Here, I will explain

- how to build your own NtKinect program as a DLL, even if the program uses a Kinect V2 function that needs other DLLs and setting files, and

- how to use your NtKinect DLL from other languages and environments, such as C#/Unity.

This article explains how to create a DLL file for gesture recognition.

How to write program

Let's create a DLL that performs gesture recognition, as an example of a Kinect V2 function that actually requires other DLLs and additional files.

- Start from the Visual Studio 2017 project KinectV2_dll.zip of "How to make Kinect V2 program as DLL and using it from Unity".

- In the following explanation, it is assumed that the project folder has been renamed to "NtKinectDll4". It should have the following file structure. There may be other folders and files besides those listed, but ignore them for now.

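NtKinectDll4/NtKinectDll.sln

NtKinectDll4/x64/Release/

NtKinectDll4/NtKinectDll/dllmain.cpp

NtKinectDll4/NtKinectDll/NtKinect.h

NtKinectDll4/NtKinectDll/NtKinectDll.h

NtKinectDll4/NtKinectDll/NtKinectDll.cpp

NtKinectDll4/NtKinectDll/stdafx.cpp

NtKinectDll4/NtKinectDll/stdafx.h

NtKinectDll4/NtKinectDll/targetver.h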

|

- Replace NtKinect.h with the latest version.

- Configure settings for include files and libraries from project's properties.

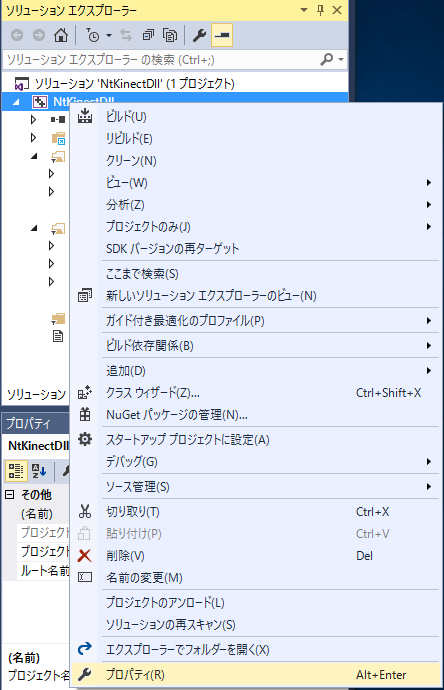

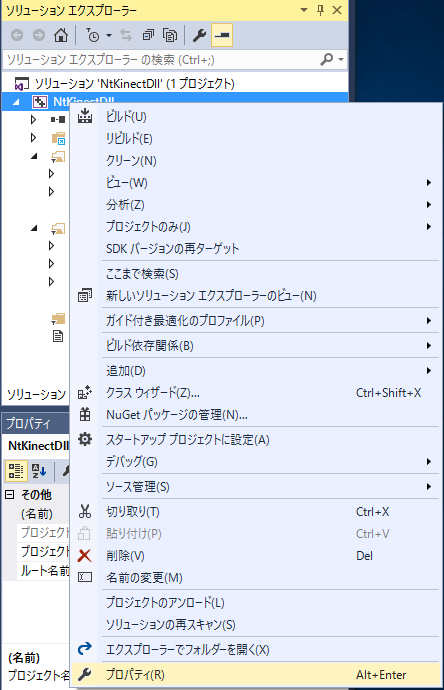

- In the Solution Explorer, right-click the project name and select "Properties" from the menu.

-

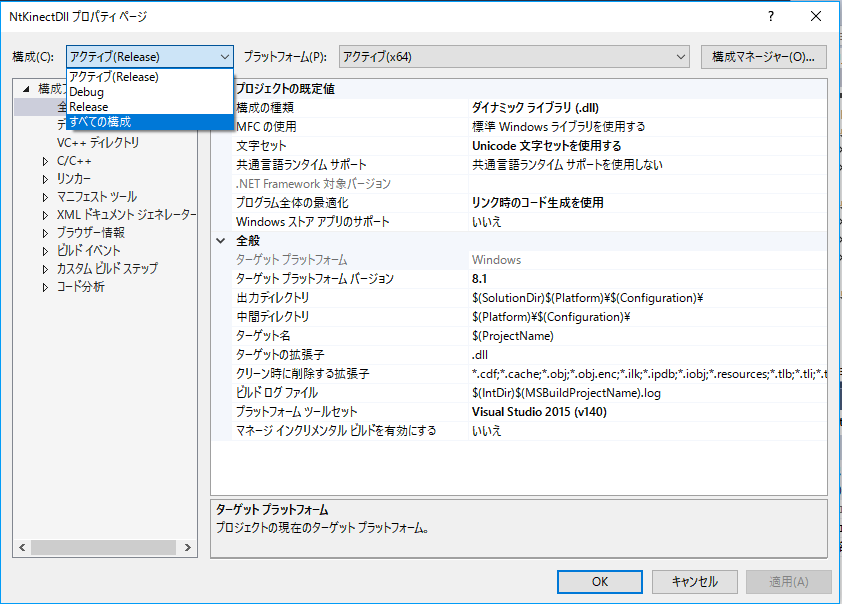

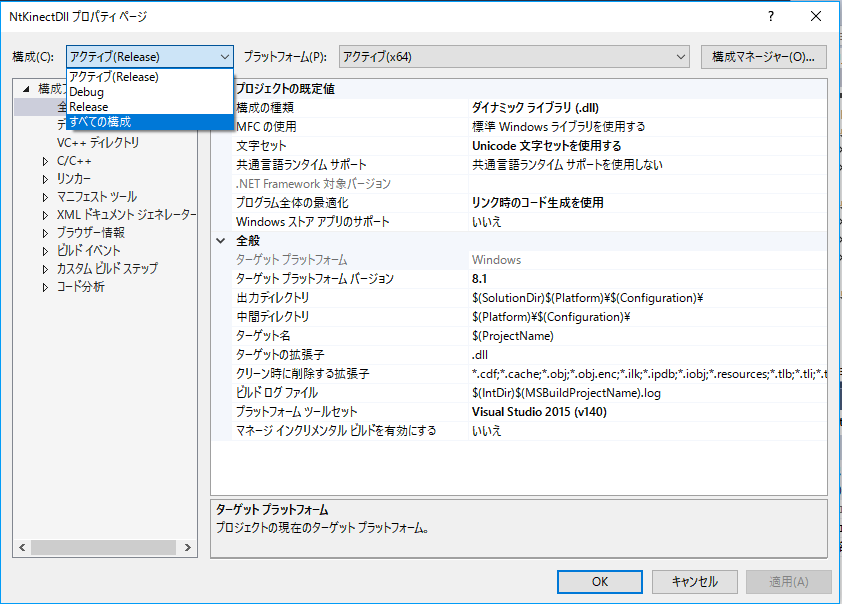

Make the settings with Configuration set to "All Configurations" and Platform set to "Active (x64)". This way you configure the "Debug" and "Release" modes at the same time. Of course, you can also change the settings separately.

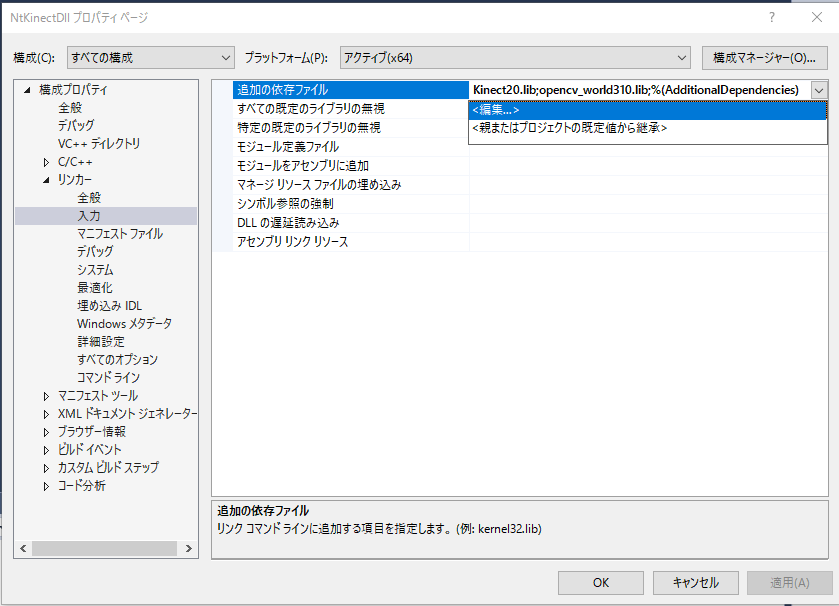

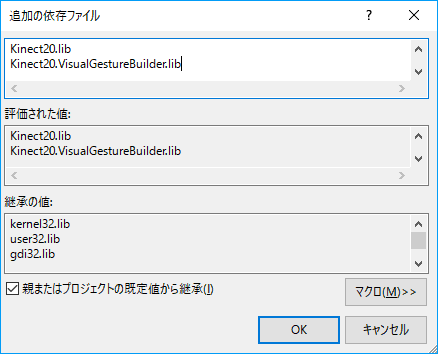

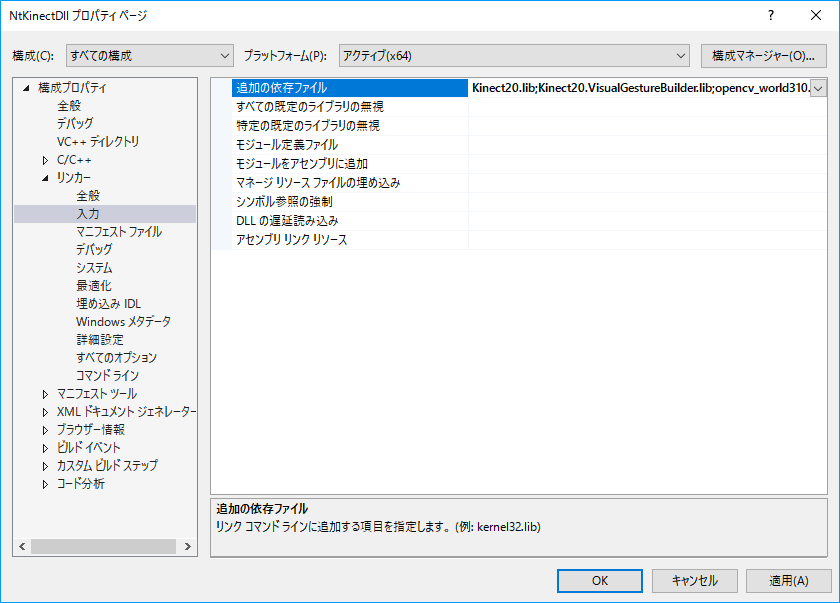

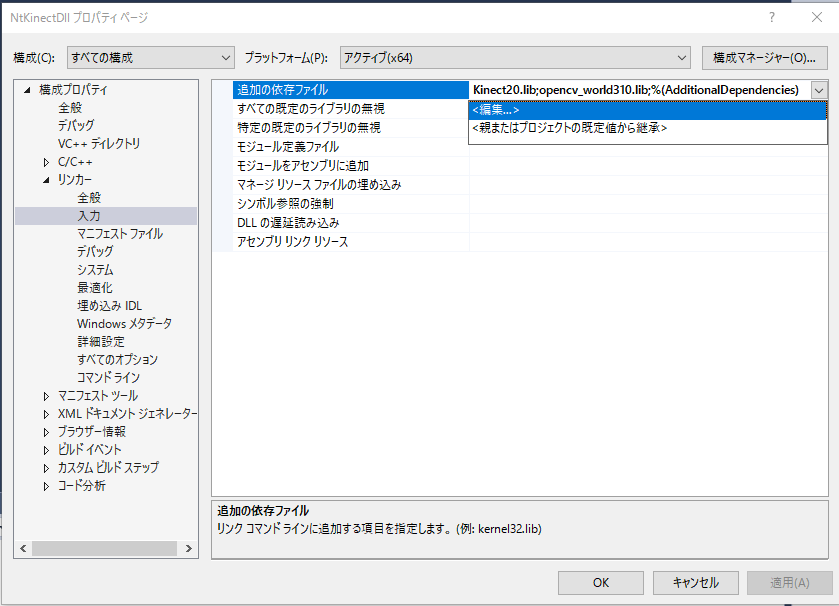

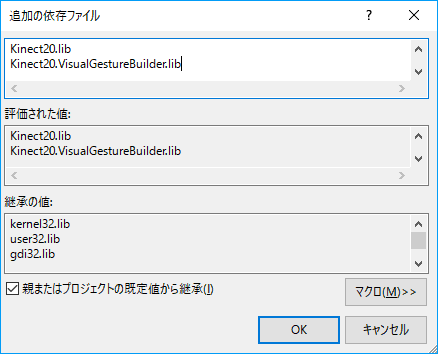

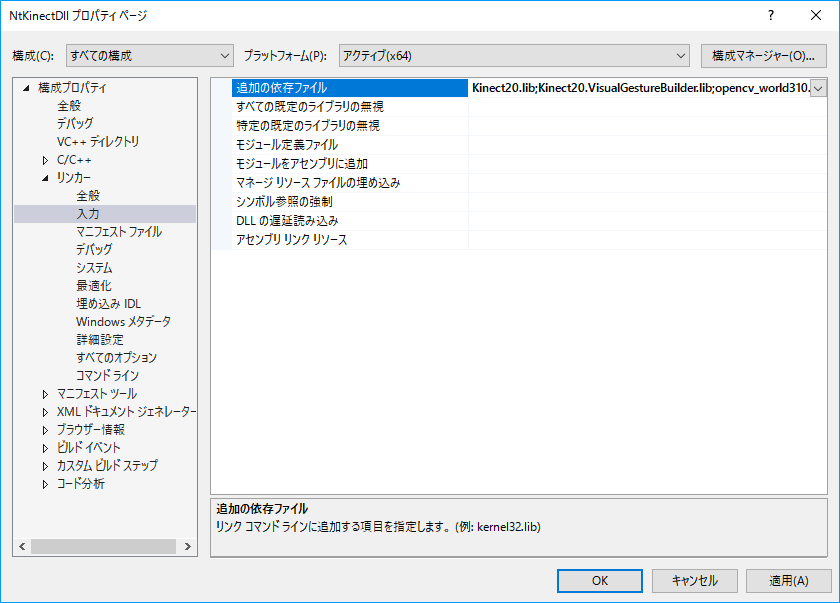

- Add the gesture recognition library to the linker input.

"Configuration Properties" -> "Linker" -> "General" -> "Input"

Kinect20.VisualGestureBuilder.lib

- Write the declarations in the header file. The header file is named "ProjectName.h", so in this case it is "NtKinectDll.h". The part in red is the prototype declarations of the functions added this time.

| NtKinectDll.h |

#ifdef NTKINECTDLL_EXPORTS

#define NTKINECTDLL_API __declspec(dllexport)

#else

#define NTKINECTDLL_API __declspec(dllimport)

#endif

#include <string>

#include <unordered_map>

namespace NtKinectGesture {

extern "C" {

NTKINECTDLL_API void* getKinect(void);

NTKINECTDLL_API void setGestureFile(void* ptr, wchar_t* filename);

NTKINECTDLL_API int setGestureId(void* ptr, wchar_t* name, int id); // id: non-zero

NTKINECTDLL_API void setGesture(void* ptr);

NTKINECTDLL_API int getDiscreteGesture(void* ptr, int *gid, float *confidence);

NTKINECTDLL_API int getContinuousGesture(void* ptr, int *gid, float *progress);

NTKINECTDLL_API void stopKinect(void* ptr);

}

std::unordered_map<std::string, int> gidMap;

}

|

- The functions are defined in "ProjectName.cpp", in this case "NtKinectDll.cpp". To recognize gestures, define the USE_GESTURE constant before including NtKinect.h, and add the definitions of the functions. If you define USE_GESTURE, the program must be linked with the "Kinect20.VisualGestureBuilder.lib" library.

The basic policy for writing NtKinectDll.cpp is as follows.

- Gestures recognized on the DLL (C++) side are transmitted to the Unity (C#) side by ID number. Frequent exchange of string data between Unity and the DLL degrades execution performance. Therefore, the Unity side defines a "Gesture ID Number" as a positive integer for each gesture at the beginning and tells it to the DLL side. When the DLL side recognizes a gesture, it reports the gesture type to the Unity side by its "Gesture ID Number". This requires a mapping from gesture name to ID number on the DLL side, which can be implemented efficiently with std::unordered_map<std::string,int> from the C++ standard library (available since C++11). A gesture whose ID number has not been defined gets the ID number 0.

| variable declaration in header file |

|---|

std::unordered_map<std::string, int> gidMap;

|

| the code adding string->int mapping in setGestureId() |

|---|

gidMap[s] = id; // <-- s is a string of C++ and id is a positive integer.

|

| the code referring to the string->int mapping in getDiscreteGesture() |

|---|

gid[i] = gidMap[gname]; // <-- returns the int for gname; returns 0 if the name is not registered.

|
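The name-to-ID mapping described above can be sketched in isolation as follows. This is only an illustration: the helper names `registerGestureId` and `lookupGestureId` and the alias `GestureIdMap` are hypothetical and do not appear in NtKinectDll. The lookup uses `find()` instead of `operator[]`, so an unregistered name yields 0 without adding a new entry to the map.

```cpp
#include <string>
#include <unordered_map>

// Hypothetical alias and helper names; only the use of std::unordered_map
// mirrors the article.
using GestureIdMap = std::unordered_map<std::string, int>;

// Register a gesture name under a positive ID chosen by the caller.
// Returns the ID on success, or 0 if the ID is not positive.
int registerGestureId(GestureIdMap& map, const std::string& name, int id) {
    if (id <= 0) return 0;
    map[name] = id;
    return id;
}

// Look up the ID for a gesture name. Returns 0 for an unregistered name
// and, unlike operator[], does not insert a new entry.
int lookupGestureId(const GestureIdMap& map, const std::string& name) {
    auto it = map.find(name);
    return it == map.end() ? 0 : it->second;
}
```

With `operator[]`, as in the article's code, the result for an unknown name is also 0, but each unknown name is stored in the map as a side effect.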

- The character encoding of all strings passed from Unity (C#) to the DLL (C++) is UTF-16. The DLL functions receive strings as wchar_t*. When a type conversion is needed, convert the string to UTF-8 on the DLL side.

| The code converting the UTF-16 wchar_t* name into the UTF-8 string nameBuffer |

|---|

int len = WideCharToMultiByte(CP_UTF8, NULL, name, -1, NULL, 0, NULL, NULL) + 1; // <-- Find the number of bytes of the converted string.

char* nameBuffer = new char[len]; // <-- Allocate memory for the UTF-8 string.

memset(nameBuffer, '\0', len); // <-- Fill the area with null characters.

WideCharToMultiByte(CP_UTF8, NULL, name, -1, nameBuffer, len, NULL, NULL); // <-- Convert from UTF-16 to UTF-8 and write the result into nameBuffer.

|
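WideCharToMultiByte() is a Windows API, so the snippet above only builds on Windows. To show what the UTF-16 to UTF-8 conversion actually does, here is a portable sketch written by hand. It is not the method used in NtKinectDll; the function name `utf16ToUtf8` is hypothetical, and the choice to replace unpaired surrogates with U+FFFD is an assumption of this sketch.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Portable sketch: convert UTF-16 code units to a UTF-8 std::string.
// Unpaired surrogates are replaced with U+FFFD (an assumption of this sketch).
std::string utf16ToUtf8(const std::u16string& in) {
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i) {
        std::uint32_t cp = in[i];
        if (cp >= 0xD800 && cp <= 0xDBFF) {  // high surrogate
            if (i + 1 < in.size() && in[i + 1] >= 0xDC00 && in[i + 1] <= 0xDFFF) {
                // Combine the surrogate pair into one code point.
                cp = 0x10000 + ((cp - 0xD800) << 10) + (in[i + 1] - 0xDC00);
                ++i;
            } else {
                cp = 0xFFFD;
            }
        } else if (cp >= 0xDC00 && cp <= 0xDFFF) {  // stray low surrogate
            cp = 0xFFFD;
        }
        if (cp < 0x80) {  // 1 byte
            out += static_cast<char>(cp);
        } else if (cp < 0x800) {  // 2 bytes
            out += static_cast<char>(0xC0 | (cp >> 6));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {  // 3 bytes
            out += static_cast<char>(0xE0 | (cp >> 12));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else {  // 4 bytes
            out += static_cast<char>(0xF0 | (cp >> 18));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}
```

Gesture names such as "Steer_Left" are plain ASCII, so for them the UTF-8 result is byte-for-byte the same text with one byte per character.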

- The Unity (C#) side prepares the area into which recognized gesture data is written. Since we want to use the recognized gesture data on the Unity side, the data area is allocated there. On the DLL side, the data to be returned to Unity is written as elements of a one-dimensional array.

- Gesture recognition is done by calling a single function, but the recognized discrete gestures and continuous gestures are returned separately. For a function that returns recognized gestures, it is convenient to use the number of recognized gestures as the return value. However, since the number of recognized discrete gestures generally differs from the number of recognized continuous gestures, we return each through a different function. Gesture recognition itself is done for both at once by one function call.
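The "write into the caller's arrays and return the count" pattern can be sketched without Kinect as follows. The names `RecognizedGestures` and `copyGestures` are hypothetical, and the `capacity` parameter is an addition of this sketch: the functions in this article do not take one and rely on the caller to pass arrays that are large enough.

```cpp
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical stand-in for the list of (gesture name, value) pairs
// that recognition produced for the current frame.
using RecognizedGestures = std::vector<std::pair<std::string, float>>;

// Copy results into caller-owned arrays and return how many entries were
// written. The capacity parameter is an assumption of this sketch.
int copyGestures(const RecognizedGestures& found,
                 const std::unordered_map<std::string, int>& ids,
                 int* gid, float* value, int capacity) {
    int n = 0;
    for (const auto& g : found) {
        if (n >= capacity) break;  // never write past the caller's arrays
        auto it = ids.find(g.first);
        gid[n] = (it == ids.end()) ? 0 : it->second;  // 0 means "no ID defined"
        value[n] = g.second;  // confidence or progress
        ++n;
    }
    return n;
}
```

Calling such a function once for the discrete list and once for the continuous list gives two independent counts, which is why the article exposes two functions.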

- Prepare the stopKinect() function to destroy all the OpenCV windows. Since a window displayed by OpenCV is operated by another thread, it may survive and keep using the NtKinectDll.dll file even after the Unity application terminates. This is troublesome because Unity must then be restarted before NtKinectDll.dll can be replaced. Therefore, we prepare a function that destroys all OpenCV windows and call it when the Unity application terminates.

- When a gesture is recognized, the trackingId corresponding to the gesture can be obtained, but this time we will not pass this data to the Unity side. By comparing the trackingId of a skeleton with the trackingId of a gesture, you can tell which skeleton performed the gesture. However, to keep the example easy to understand, we do not use the gesture's trackingId here. Using it is meaningful only when skeleton information is also sent to the Unity side.
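Although this article does not use it, the trackingId comparison mentioned above amounts to a simple search. The following is a minimal sketch, assuming the tracking IDs are available as 64-bit unsigned integers; the function name `findSkeletonIndex` and the way the IDs are passed in are hypothetical and not part of NtKinectDll.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Return the index of the skeleton whose tracking ID equals the gesture's
// tracking ID, or -1 when no skeleton matches.
int findSkeletonIndex(const std::vector<std::uint64_t>& skeletonTrackingIds,
                      std::uint64_t gestureTrackingId) {
    for (std::size_t i = 0; i < skeletonTrackingIds.size(); ++i) {
        if (skeletonTrackingIds[i] == gestureTrackingId) {
            return static_cast<int>(i);
        }
    }
    return -1;
}
```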

| NtKinectDll.cpp |

#include "stdafx.h"

#include <unordered_map>

#include "NtKinectDll.h"

#define USE_GESTURE

#include "NtKinect.h"

using namespace std;

namespace NtKinectGesture {

NTKINECTDLL_API void* getKinect(void) {

NtKinect* kinect = new NtKinect;

return static_cast<void*>(kinect);

}

NTKINECTDLL_API void setGestureFile(void* ptr, wchar_t* filename) {

NtKinect *kinect = static_cast<NtKinect*>(ptr);

wstring fname(filename);

(*kinect).setGestureFile(fname);

}

NTKINECTDLL_API int setGestureId(void* ptr, wchar_t* name, int id) {

int len = WideCharToMultiByte(CP_UTF8,NULL,name,-1,NULL,0,NULL,NULL) + 1;

char* nameBuffer = new char[len];

memset(nameBuffer,'\0',len);

WideCharToMultiByte(CP_UTF8,NULL,name,-1,nameBuffer,len,NULL,NULL);

string s(nameBuffer);

delete[] nameBuffer; // release the temporary UTF-8 buffer

gidMap[s] = id;

return id;

}

NTKINECTDLL_API void setGesture(void* ptr) {

NtKinect *kinect = static_cast<NtKinect*>(ptr);

(*kinect).setRGB();

(*kinect).setSkeleton();

int scale = 4;

cv::Mat img((*kinect).rgbImage);

cv::resize(img,img,cv::Size(img.cols/scale,img.rows/scale),0,0);

for (auto person: (*kinect).skeleton) {

for (auto joint: person) {

if (joint.TrackingState == TrackingState_NotTracked) continue;

ColorSpacePoint cp;

(*kinect).coordinateMapper->MapCameraPointToColorSpace(joint.Position,&cp);

cv::rectangle(img, cv::Rect((int)cp.X/scale-2, (int)cp.Y/scale-2,4,4), cv::Scalar(0,0,255),2);

}

}

cv::imshow("rgb",img);

cv::waitKey(1);

(*kinect).setGesture();

}

NTKINECTDLL_API int getDiscreteGesture(void* ptr, int *gid, float *confidence) {

NtKinect *kinect = static_cast<NtKinect*>(ptr);

for (int i=0; i<(*kinect).discreteGesture.size(); i++) {

auto g = (*kinect).discreteGesture[i];

string gname = (*kinect).gesture2string(g.first);

gid[i] = gidMap[gname];

confidence[i] = g.second;

}

return (*kinect).discreteGesture.size();

}

NTKINECTDLL_API int getContinuousGesture(void* ptr, int *gid, float *progress){

NtKinect *kinect = static_cast<NtKinect*>(ptr);

for (int i=0; i<(*kinect).continuousGesture.size(); i++) {

auto g = (*kinect).continuousGesture[i];

string gname = (*kinect).gesture2string(g.first);

gid[i] = gidMap[gname];

progress[i] = g.second;

}

return (*kinect).continuousGesture.size();

}

NTKINECTDLL_API void stopKinect(void* ptr) {

cv::destroyAllWindows();

}

} |

In the NtKinectDll.cpp code above, the text color basically follows this policy.

green characters: Parts related to DLL creation in Visual Studio

blue characters: Parts defining DLL functions for gesture recognition

red characters: Parts using NtKinect and OpenCV

magenta characters: Parts related to data conversion (marshaling) between C# and C++

- Compile with "Application configuration" set to "Release". NtKinectDll.lib and NtKinectDll.dll are generated in the folder NtKinectDll4/x64/Release/.

[Caution] (added Oct/07/2017) If you encounter a "dllimport ..." error when building with Visual Studio 2017 Update 2, please refer to here and define NTKINECTDLL_EXPORTS in NtKinectDll.cpp.

- Please click here for this sample project NtKinectDll4.zip. Since the above zip file may not include the latest "NtKinect.h", download the latest version from here and replace the old one with it.

Use NtKinect DLL from Unity

Let's use NtKinectDll.dll in Unity.

- For more details on how to use a DLL with Unity, please see the official manual.

- Data in Unity (C#) is managed, which means it may be moved by the C# garbage collector. Data in the DLL (C++) is unmanaged, which means it is not moved. To pass data between C# and C++, the data state must be converted. The functions of the System.Runtime.InteropServices.Marshal class in C# can be used for this.

- For details on how to pass data between different languages, refer to the "Interop Marshaling" section of "Interoperating with Unmanaged Code" at MSDN.

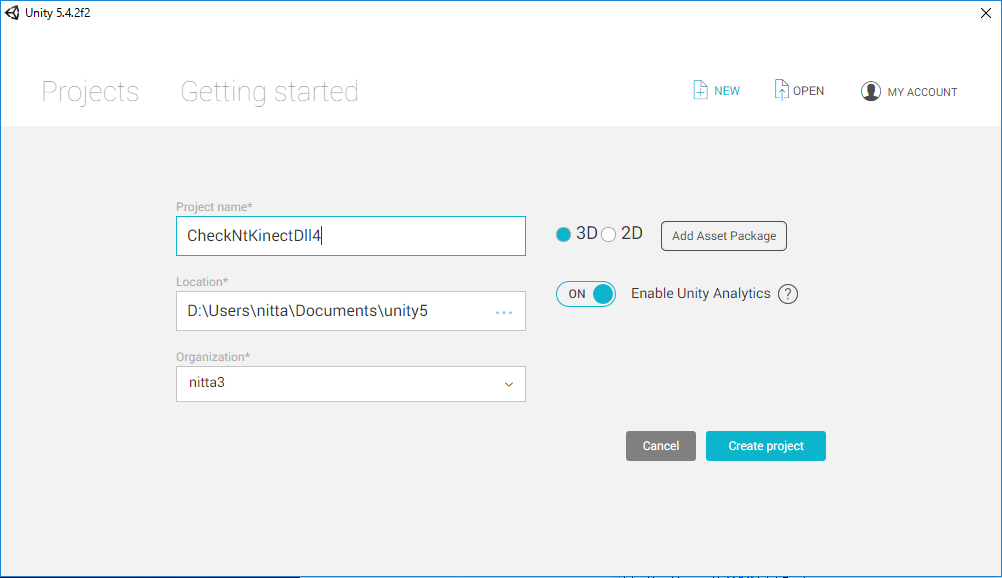

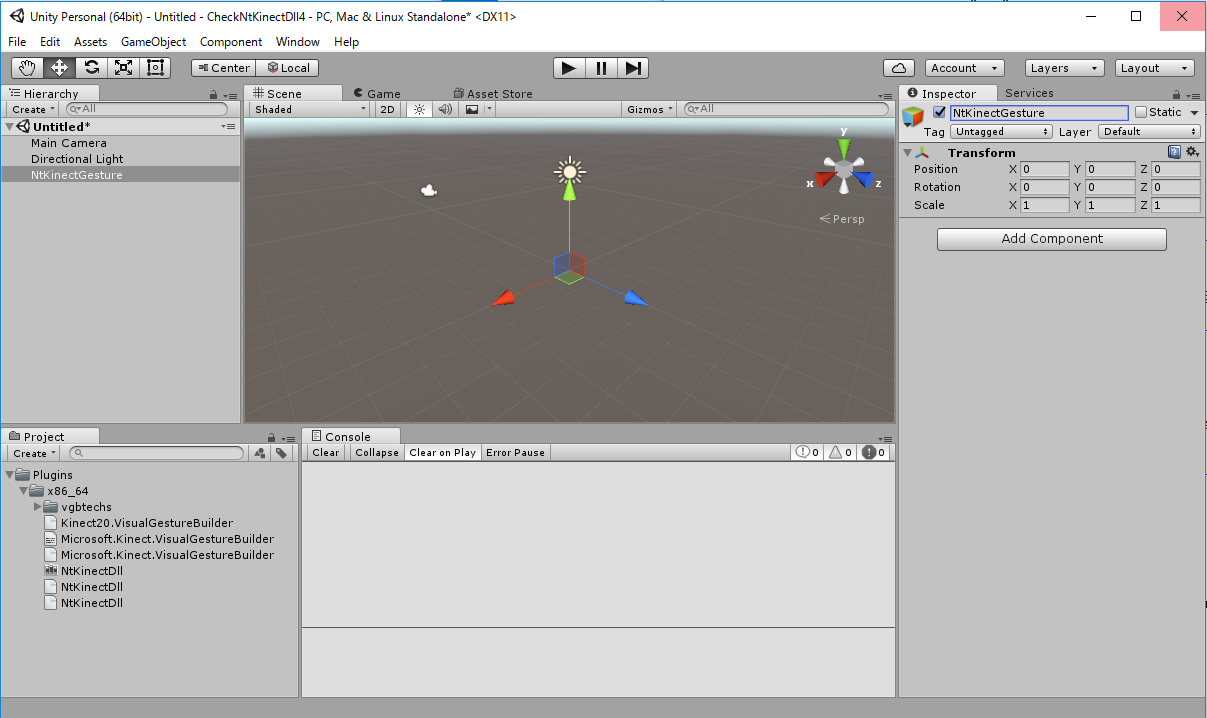

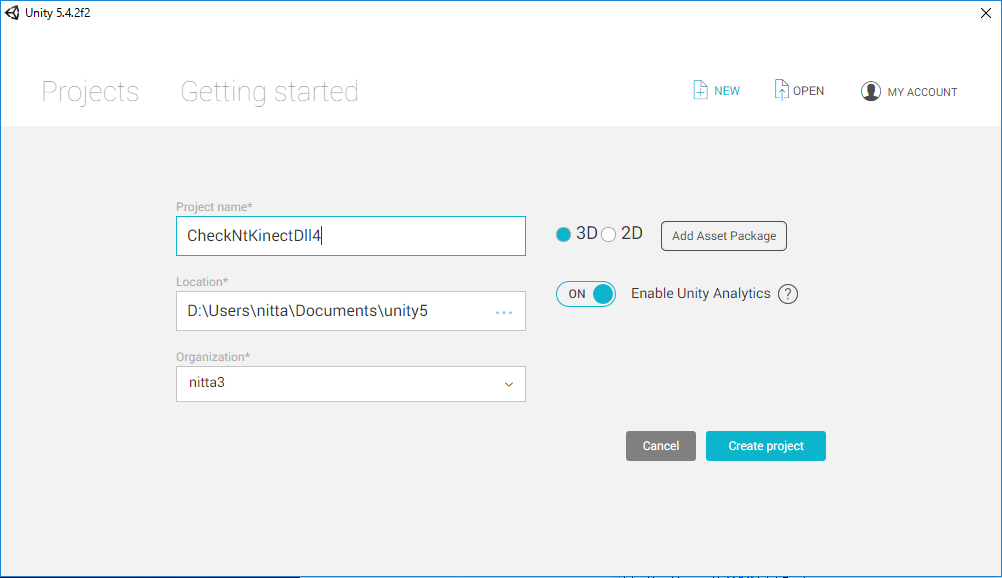

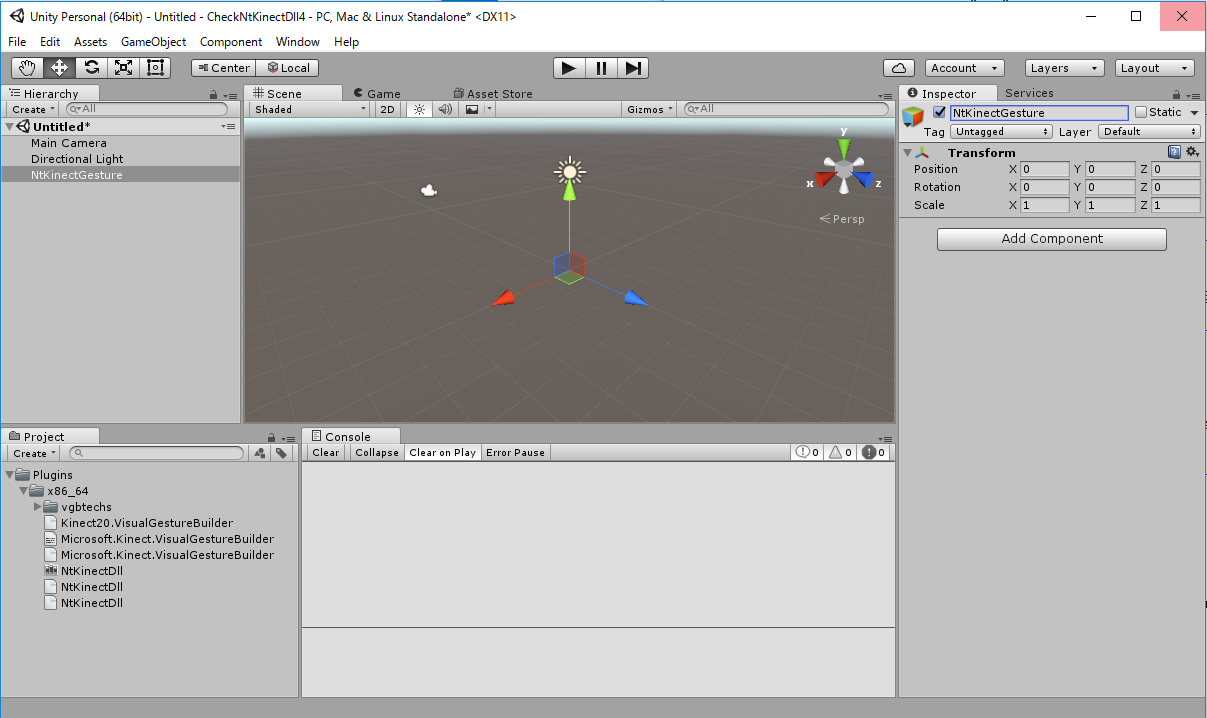

- Start a new Unity project.

-

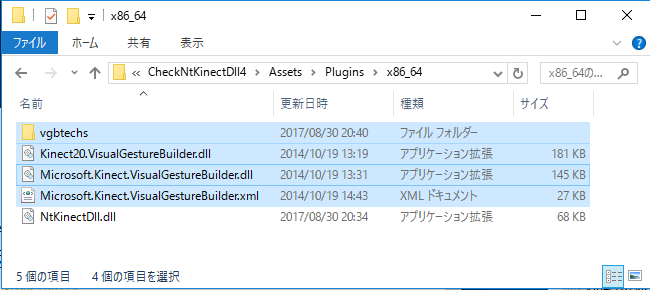

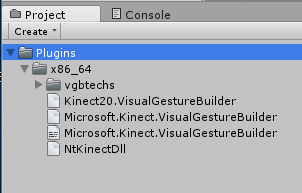

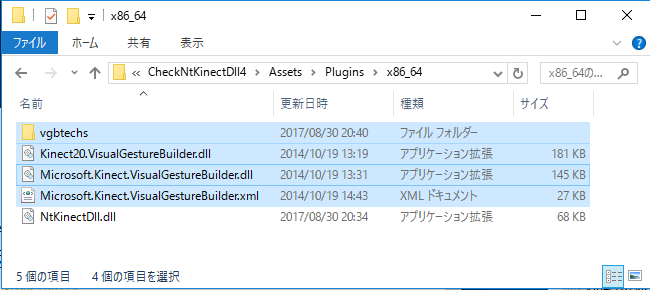

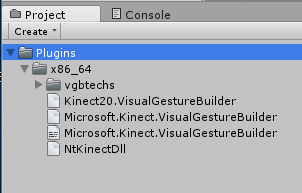

Copy NtKinectDll.dll to the project folder Assets/Plugins/x86_64/. Also copy all the files under $(KINECTSDK20_DIR)Redist\VGB\x64\ to the project folder Assets/Plugins/x86_64/.

[Notice] $(KINECTSDK20_DIR) is set to "C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\" in my environment. Adjust the path to match your own environment.

-

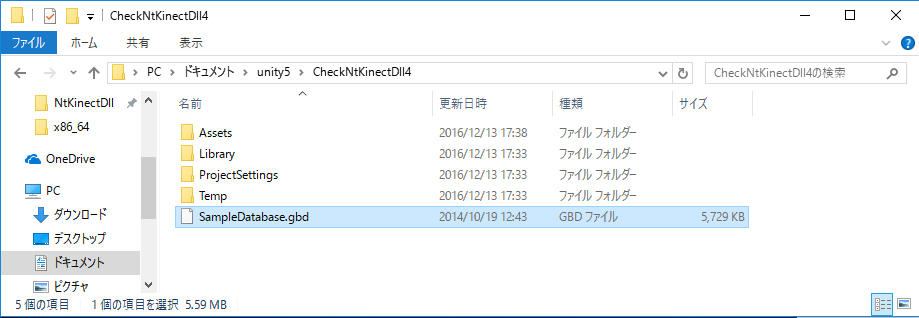

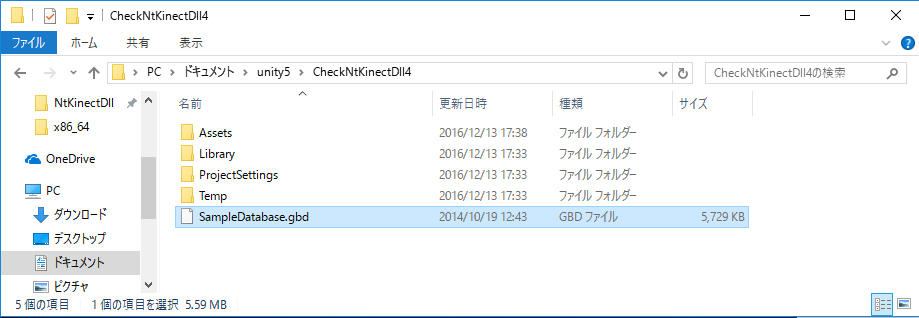

Copy the gesture classifier files (".gbd" and ".gba") to the folder directly under the Unity project. Let's use $(KINECTSDK20_DIR)Tools\KinectStudio\databases\SampleDatabase.gbd, which we used in "NtKinect: How to recognize gesture with Kinect V2". Copy this file directly under Unity's project folder.

CheckNtKinectDll4/SampleDatabase.gbd

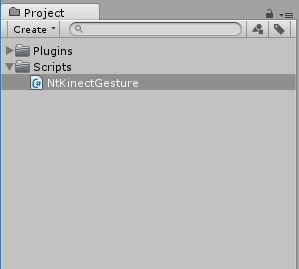

- Place the Empty Object in the scene and change its name to NtKinectGesture.

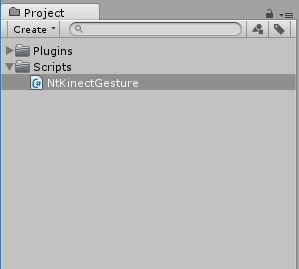

- Create C# script under the Project's Assets/Scripts/

From the top menu, "Assets"-> "Create" -> "C# Script" -> Filename is "NtKinectGesture"

NtKinectGesture.cs is created in the "Assets/Scripts/" folder of the project.

The basic policy for writing NtKinectGesture.cs is as follows.

- Pointers in C++ are treated as System.IntPtr in C#.

- The Unity side defines a "Gesture ID Number" as a positive integer for each gesture at the beginning and tells it to the DLL side.

- The character encoding of all strings passed from Unity (C#) to the DLL (C++) is UTF-16. A string in Unity (C#) is managed, while the string and wstring of the DLL (C++) are unmanaged. When passing a Unity (C#) string as an argument to a DLL (C++) function, convert it to an unmanaged UTF-16 string on heap memory using the Marshal.StringToHGlobalUni() function. This eliminates the danger that the data is moved by the C# garbage collector. When the unmanaged data is no longer needed, you must call the Marshal.FreeHGlobal() function to free the memory.

- The Unity (C#) side prepares the area into which recognized gesture data is written. Since we want to use the recognized gesture data on the Unity side, the data area is allocated there. We use arrays in this example. When passing a C# array as an argument to a DLL function, pin it using GCHandle so that the C# garbage collector does not move the data area. Note that the pinned area must be released when it is no longer needed.

GCHandle gch = GCHandle.Alloc(array, GCHandleType.Pinned); // pin the managed array so that it is not moved

...

gch.AddrOfPinnedObject() // returns the address of the beginning of the pinned array

...

gch.Free(); // release the pin

|

[Notice] A blittable type is a simple data type whose internal representation is the same in both C# and C++. Data should be passed using an area allocated on the heap as a one-dimensional array of a blittable type. Following that policy avoids unnecessary trouble with GCHandle.

- Gesture recognition is done by calling a single function, but the recognized discrete gestures and continuous gestures are returned separately. After calling setGesture(), call getDiscreteGesture() and/or getContinuousGesture().

- Finally, call the stopKinect() function so that the DLL does not keep running and holding NtKinectDll.dll after the Unity application ends.

- The number of elements in the arrays prepared by the Unity side is set to "max number of gesture types" x "max number of people recognized at a time". In this example, there are 3 types of discrete gestures and 1 type of continuous gesture, and Kinect may recognize up to 6 people simultaneously. Therefore, the variable gestureCount, the number of elements, is set to max(3,1) * 6 = 18.
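The sizing rule above can be written as a small compile-time function. This is just the arithmetic from the text; the function name `gestureBufferSize` is hypothetical.

```cpp
// Number of array elements needed so that neither result list can overflow:
// the larger of the two gesture-type counts, times the number of people.
constexpr int gestureBufferSize(int discreteTypes, int continuousTypes, int maxPeople) {
    return (discreteTypes > continuousTypes ? discreteTypes : continuousTypes) * maxPeople;
}

// 3 discrete types, 1 continuous type, up to 6 people: max(3,1) * 6 = 18.
static_assert(gestureBufferSize(3, 1, 6) == 18, "matches the value used in this article");
```

The maximum is taken rather than the sum because the same pair of arrays is reused, first for the discrete results and then for the continuous results.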

| NtKinectGesture.cs |

using UnityEngine;

using System.Collections;

using System.Runtime.InteropServices;

using System;

public class NtKinectGesture : MonoBehaviour {

[DllImport ("NtKinectDll")] private static extern System.IntPtr getKinect();


[DllImport ("NtKinectDll")] private static extern void setGestureFile(System.IntPtr ptr, System.IntPtr filename);

[DllImport ("NtKinectDll")] private static extern int setGestureId(System.IntPtr ptr, System.IntPtr name, int id);

[DllImport ("NtKinectDll")] private static extern void setGesture(System.IntPtr kinect);

[DllImport ("NtKinectDll")] private static extern int getDiscreteGesture(System.IntPtr kinect, System.IntPtr gid, System.IntPtr cnf);

[DllImport ("NtKinectDll")] private static extern int getContinuousGesture(System.IntPtr kinect, System.IntPtr gid, System.IntPtr cnf);

[DllImport ("NtKinectDll")] private static extern void stopKinect(System.IntPtr kinect);

private System.IntPtr kinect;

int gestureCount = 18;

void Start () {

kinect = getKinect();

System.IntPtr gbd = Marshal.StringToHGlobalUni("SampleDatabase.gbd"); // gbd file

System.IntPtr g1 = Marshal.StringToHGlobalUni("Steer_Left"); // discrete

System.IntPtr g2 = Marshal.StringToHGlobalUni("Steer_Right"); // discrete

System.IntPtr g3 = Marshal.StringToHGlobalUni("SteerProgress"); // continuous

System.IntPtr g4 = Marshal.StringToHGlobalUni("SteerStraight"); // discrete

setGestureFile(kinect,gbd);

setGestureId(kinect,g1,1);

setGestureId(kinect,g2,2);

setGestureId(kinect,g3,3);

setGestureId(kinect,g4,4);

Marshal.FreeHGlobal(gbd);

Marshal.FreeHGlobal(g1);

Marshal.FreeHGlobal(g2);

Marshal.FreeHGlobal(g3);

Marshal.FreeHGlobal(g4);

}

void Update () {

setGesture(kinect);

int[] gid = new int[gestureCount];

float[] cnf = new float[gestureCount];

GCHandle gch = GCHandle.Alloc(gid,GCHandleType.Pinned);

GCHandle gch2 = GCHandle.Alloc(cnf,GCHandleType.Pinned);

// discrete gesture

int n = getDiscreteGesture(kinect, gch.AddrOfPinnedObject(), gch2.AddrOfPinnedObject());

for (int i=0; i<n; i++) {

Debug.Log(i + " discrete " + gid[i] + " " + cnf[i]); // "cnf" value is "confidence"

}

// continuous gesture

n = getContinuousGesture(kinect, gch.AddrOfPinnedObject(), gch2.AddrOfPinnedObject());

for (int i=0; i<n; i++) {

Debug.Log(i + " continuous " + gid[i] + " " + cnf[i]); // "cnf" value is "progress"

}

gch.Free();

gch2.Free();

}

void OnApplicationQuit() {

stopKinect(kinect);

}

}

|

In the NtKinectGesture.cs listing above, the text color basically follows this policy.

green characters: Declaration part for using DLL functions in Unity

blue characters: Parts related to the DLL's function calls

magenta characters: Parts related to data conversion (marshaling) between C# and C++

-

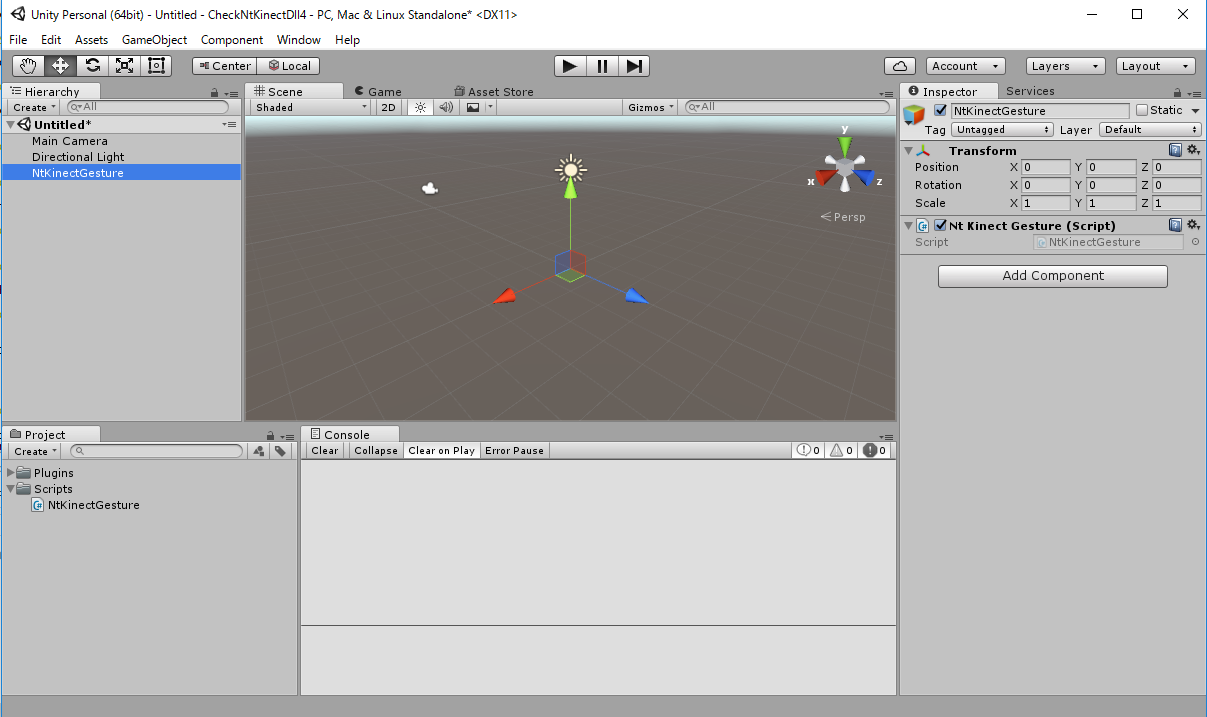

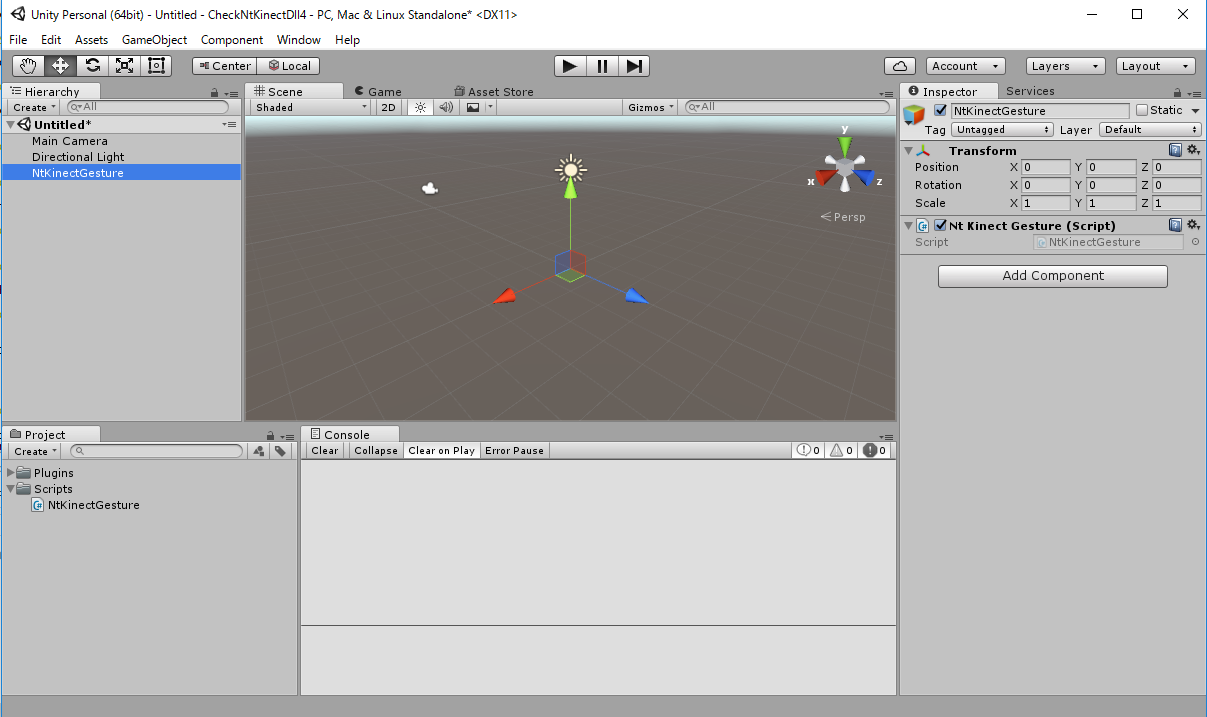

Drag "NtKinectGesture.cs" in Project panel onto "NtKinectGesture" object

in the Hierarchy panel and add it as a Component.

-

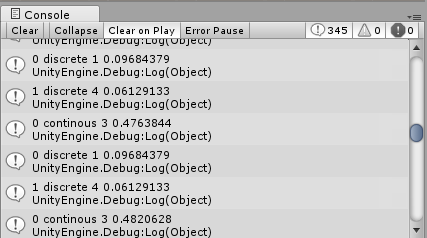

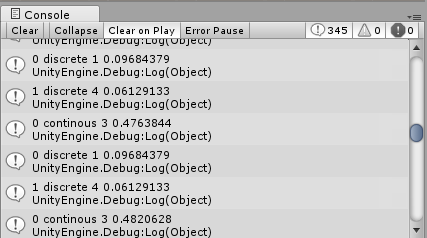

When you run the program, the recognized gestures are displayed on the console panel as output of Debug.Log. In this example, the Game screen does not change.

There are four types of gestures defined in the "SampleDatabase.gbd" file.

| name | type | ID Number |
|---|---|---|
| Steer_Left | discrete | 1 |
| Steer_Right | discrete | 2 |
| SteerProgress | continuous | 3 |
| SteerStraight | discrete | 4 |

The first 3 lines of the console in the above figure mean the following.

0 discrete 1 0.09684379 <-- Steer_Left is recognized with confidence 0.09684379

1 discrete 4 0.06129133 <-- SteerStraight is recognized with confidence 0.06129133

0 continuous 3 0.4763844 <-- SteerProgress is recognized with progress 0.4763844

|

[Notice] In this case, once the continuous gesture SteerProgress has been recognized, it seems to remain recognized as long as the skeleton is recognized. It remains unknown whether this behavior is normal or not.

[Notice] We generate an OpenCV window in the DLL to display the skeleton recognition state. Note that when the OpenCV window has focus, that is, when the Unity game window does not, the Unity screen will not change, but the console panel will still be updated. In this example, however, the game screen does not change even if the Unity window has focus.

- Please click here for this Unity sample project CheckNtKinectDll4.zip.

How to Find the Gesture Name in the Gesture Classifier file

In short, you only need to extract the readable characters contained in the gesture classifier file. You can also open and examine the classifier file with a text editor that can handle binary data. Here is an example using the Unix "strings" command.

In a Unix (Linux, MacOS X, cygwin) environment, the "strings" command retrieves readable strings from a binary file. For example, running "strings" against "SampleDatabase.gbd" in a Linux shell results in the following output.

| Quoted from the output of strings command |

$ strings SampleDatabase.gbd

VGBD

Steer_Left

Steer_Left

AdaBoostTrigger

Steer_Right

Steer_Right

AdaBoostTrigger

SteerProgress

SteerProgress

RFRProgress

SteerStraight

SteerStraight

AdaBoostTrigger

Accuracy Level

33s?"

Number Weak Classifiers at Runtime

Filter Results

...(abbreviated)...

|

Discrete gestures are determined by the AdaBoost algorithm and continuous gestures are determined by the Random-Forest algorithm. So, from the above gbd file, you can see that Steer_Left, Steer_Right, and SteerStraight are discrete gestures, and SteerProgress is a continuous gesture.
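If the "strings" command is not at hand, the same idea can be sketched in a few lines: collect runs of printable ASCII bytes and keep the runs that are long enough. This only approximates what "strings" does, it is not a reimplementation of it, and the function name `extractStrings` is hypothetical.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Collect runs of printable ASCII characters (0x20..0x7E) that are at least
// minLen bytes long. The default of 4 follows the usual default of "strings".
std::vector<std::string> extractStrings(const std::string& data, std::size_t minLen = 4) {
    std::vector<std::string> result;
    std::string run;
    for (unsigned char c : data) {
        if (c >= 0x20 && c <= 0x7E) {
            run += static_cast<char>(c);
        } else {
            // A non-printable byte ends the current run.
            if (run.size() >= minLen) result.push_back(run);
            run.clear();
        }
    }
    if (run.size() >= minLen) result.push_back(run);
    return result;
}
```

To apply this to a classifier file, read the whole file into a std::string through a std::ifstream opened with std::ios::binary and pass it to the function.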

[Notice] In the cygwin environment, the "strings" command may not be installed. See here for additional installation.

http://nw.tsuda.ac.jp/