This project is set as follows.

- link Kinect20.Face.lib library

- copy all the necessary files (Kinect20.Face.dll, etc.) after compilation.

Get the Depth image.

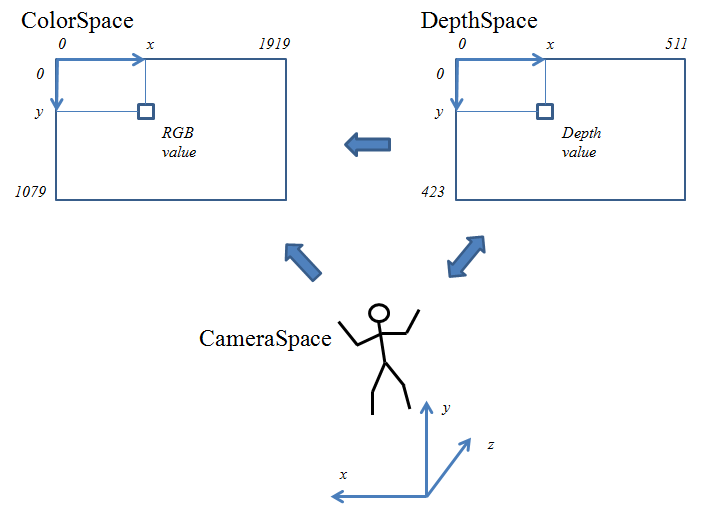

Since the value of each pixel of the Depth image is represented by CV_16UC1 (one unsigned int of 16 bitwidth), it is converted to an image that can be written in RGB color. Each pixel is converted to CV_8UC1 (one unsigned int of 8 bitwidth) once to become the maximum luminance (= 255) at 4500 mm distance, and converted to CV_8UC3 (three unsigned int of 8 bitwidth, BGR format).

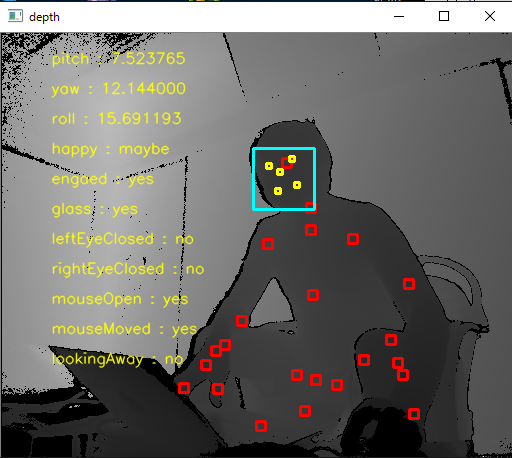

The positions of the face parts are drawn above the Depth image.

| main.cpp |

#include <iostream> #include <sstream> #define USE_FACE #include "NtKinect.h" using namespace std; string faceProp[] = { "happy", "engaed", "glass", "leftEyeClosed", "rightEyeClosed", "mouseOpen", "mouseMoved", "lookingAway" }; string dstate[] = { "unknown", "no", "maybe", "yes" }; void doJob() { NtKinect kinect; cv::Mat img8; cv::Mat img; while (1) { kinect.setDepth(); kinect.depthImage.convertTo(img8,CV_8UC1,255.0/4500); cv::cvtColor(img8,img,cv::COLOR_GRAY2BGR); kinect.setSkeleton(); for (auto person : kinect.skeleton) { for (auto joint : person) { if (joint.TrackingState == TrackingState_NotTracked) continue; DepthSpacePoint dp; kinect.coordinateMapper->MapCameraPointToDepthSpace(joint.Position,&dp); cv::rectangle(img, cv::Rect((int)dp.X-5, (int)dp.Y-5,10,10), cv::Scalar(0,0,255),2); } } kinect.setFace(false); for (cv::Rect r : kinect.faceRect) { cv::rectangle(img, r, cv::Scalar(255, 255, 0), 2); } for (vector<PointF> vf : kinect.facePoint) { for (PointF p : vf) { cv::rectangle(img, cv::Rect((int)p.X-3, (int)p.Y-3, 6, 6), cv::Scalar(0, 255, 255), 2); } } for (int p = 0; p < kinect.faceDirection.size(); p++) { cv::Vec3f dir = kinect.faceDirection[p]; cv::putText(img, "pitch : " + to_string(dir[0]), cv::Point(200 * p + 50, 30), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0,255,255), 1, CV_AA); // rename CV_AA as cv::LINE_AA (in case of opencv3 and later) cv::putText(img, "yaw : " + to_string(dir[1]), cv::Point(200 * p + 50, 60), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0,255,255), 1, CV_AA); cv::putText(img, "roll : " + to_string(dir[2]), cv::Point(200 * p + 50, 90), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0,255,255), 1, CV_AA); } for (int p = 0; p < kinect.faceProperty.size(); p++) { for (int k = 0; k < FaceProperty_Count; k++) { int v = kinect.faceProperty[p][k]; cv::putText(img, faceProp[k] +" : "+ dstate[v], cv::Point(200 * p + 50, 30 * k + 120), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0,255,255), 1, CV_AA); } } cv::imshow("depth", img); auto key = cv::waitKey(1); if (key == 'q') break; } cv::destroyAllWindows(); } int main(int argc, char** argv) { try { doJob(); } catch (exception &ex) { cout << ex.what() << endl; string s; cin >> s; } return 0; } |

The positions of the face parts recognized are drawn as yellow rectangles above the Depth image.

In this example, each person's face orientation and states are displayed at the top of the screen.

At the upper left corner of the window, face orientation (pitch, yaw, roll) and recognized face properties are displayed.

Since the above zip file may not include the latest "NtKinect.h", Download the latest version from here and replace old one with it.